A data engineer’s guide to warehousing with Azure Synapse Analytics

Businesses need tools that can turn raw data into useful insights because data is what drives decisions. Microsoft’s Azure Synapse Analytics is a powerful cloud-based platform that helps businesses combine their data, make analytics easier, and get the most out of their information assets. By bringing data warehousing, big data processing, and advanced analytics into one service, it changes the way businesses handle data and intelligence. In this article, we’ll look at the structure, benefits, and real-world uses of Azure Synapse Analytics. We’ll focus on how it supports data lakes, data warehouses, and machine learning workloads, using real-world examples and covering how it integrates with other tools.

What is the purpose of Azure Synapse Analytics?

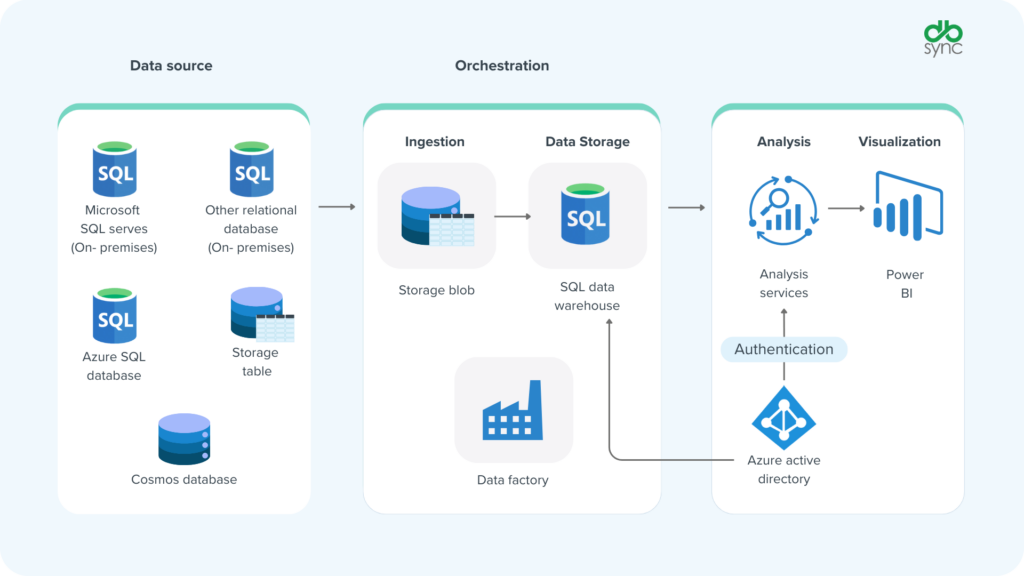

Azure Synapse Analytics is a cloud-based analytics service that brings together the best of data warehousing and big data processing into one platform. It is built on Microsoft Azure and lets businesses take in, process, and analyze data from a variety of sources, such as on-premises databases, cloud storage, and real-time streams, without the hassle of traditional data integration. It gives data engineers, analysts, and scientists a single place to work together and get insights by using tools like SQL, Apache Spark, and Azure Data Factory.

The platform’s architecture is designed for scalability and performance, and it offers both serverless and dedicated compute options. Whether you’re querying a data lake, building a data warehouse, or training machine learning models, Azure Synapse Analytics gives you the flexibility to handle a wide range of workloads. Its integration with tools like Power BI and Azure Machine Learning makes it a key part of modern data strategies.

Why should you use Azure Synapse Analytics?

Businesses today have to deal with a lot of data and make sure it’s easy to get to, safe, and useful. Azure Synapse Analytics meets these needs by providing:

- Unified data integration: Makes it easier to bring data from different sources, like SQL Server, Salesforce, or streaming platforms, into one place. This reduces manual data wrangling, which saves time and cuts down on mistakes.

- High performance: Dedicated SQL pools use massively parallel processing (MPP) to spread large datasets across many compute nodes, so queries stay fast even when workloads are complex.

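- Scalability: The platform can scale up and down with your workload, whether you’re analyzing terabytes of data or running real-time analytics. Dedicated SQL pools scale by changing their service level (DWUs), while serverless SQL pools allocate resources automatically for each query.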

- Advanced analytics: It lets you do predictive modeling, natural language processing, and other AI-driven insights with built-in support for Apache Spark and Azure Machine Learning.

- Enterprise-grade security: Features like encryption, role-based access control, and integration with Azure Active Directory (now Microsoft Entra ID) keep data safe.

For instance, a retail business that wants to analyze sales trends across regions can use Azure Synapse Analytics to pull data from its data lake and build reports quickly, without writing complex code. This efficiency lets decision-makers focus on strategy instead of data preparation.

Understanding the core components of the Azure Synapse ecosystem

Azure Synapse Analytics is designed to handle today’s complex data workloads. Here are its main components and what each one does on the platform:

| Component | Description |
|---|---|
| Synapse SQL | Provides T-SQL-based querying for structured data in data warehouses or serverless queries on data lakes. |
| Apache Spark | Enables big data processing and machine learning tasks with support for Python, Scala, and R. |
| Azure Data Factory | Facilitates data orchestration and ETL (extract, transform, load) processes for seamless data movement. |
| Synapse Studio | A unified interface for managing pipelines, queries, and visualizations, streamlining workflows. |
| Power BI Integration | Connects directly to Power BI for interactive dashboards and data visualization. |

How they work together:

These components are designed to work together in a single workspace. For instance:

- You can ingest data into Azure Data Lake Storage Gen2 (ADLS Gen2) through Synapse Pipelines.

- You can then use Spark Pools to transform and prepare this raw data in the data lake.

- You can then load the prepared data into a Dedicated SQL Pool for traditional data warehousing, or use a Serverless SQL Pool to query it directly from ADLS Gen2.

- Synapse Studio lets you monitor and manage all of these tasks.

- Finally, tools like Power BI, which connects directly to Synapse, can be used to visualize the insights.

This method of integration breaks down silos and makes the whole analytics process easier, from collecting data to getting advanced insights.

This architecture can be used for a wide range of tasks, from basic data storage to more advanced analytics. For example, companies can automate data pipelines with Azure Data Factory, and Apache Spark can handle machine learning tasks on a large scale.

Understanding data storage and schema in Synapse SQL

To get the most speed and flexibility out of Azure Synapse SQL Pools, you need to know how they store and organize data.

Decoupled storage and compute

Azure Synapse SQL Pools separate compute (measured in DWUs for dedicated pools, or allocated on demand by the serverless engine) from data storage in Azure Storage. This lets you scale each one independently and save money: you can pause a dedicated SQL pool’s compute while its data stays in storage, so you pay only for storage while the pool is paused.
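To make the savings from pausing concrete, here is a small cost model. It is a sketch that assumes two made-up rates (`COMPUTE_RATE_PER_HOUR` and `STORAGE_RATE_PER_TB_MONTH`). Real prices depend on region, DWU level, and your agreement. What the model shows is structural: compute is billed only for the hours the pool runs, while storage is billed for the whole month.

```python
# Hypothetical rates for illustration only; real Azure prices vary by
# region, service level (DWUs), and agreement.
COMPUTE_RATE_PER_HOUR = 1.20       # assumed price of a small dedicated pool
STORAGE_RATE_PER_TB_MONTH = 23.0   # assumed storage price


def monthly_cost(active_hours: float, stored_tb: float) -> float:
    """Compute is billed only while the pool runs; storage is billed
    for the whole month, whether the pool is paused or not."""
    compute = active_hours * COMPUTE_RATE_PER_HOUR
    storage = stored_tb * STORAGE_RATE_PER_TB_MONTH
    return round(compute + storage, 2)


always_on = monthly_cost(active_hours=730, stored_tb=2)       # about 24 x 7
business_hours = monthly_cost(active_hours=220, stored_tb=2)  # about 10 h x 22 days
print(f"always on: {always_on}, paused off-hours: {business_hours}")
```

Under these assumed rates, the storage portion stays the same in both scenarios. Only the compute hours change.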

Underlying storage

Azure Data Lake Storage Gen2 (ADLS Gen2), which is built on Azure Blob Storage, is the primary storage account for a Synapse workspace. Serverless SQL pools query files there directly, while dedicated SQL pools load data into their own optimized, managed storage.

Schema flexibility: schema-on-write vs. schema-on-read

Azure Synapse supports both schema-on-write, where the schema is defined before data is loaded (common for Dedicated SQL Pools), and schema-on-read, where the schema is applied when the data is queried (common for data lakes). This lets you work with both curated, structured data and raw or semi-structured files.
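The difference between the two approaches comes down to *when* types are enforced. The following plain-Python sketch makes that concrete. It is an analogy, not Synapse code: `SALES_SCHEMA`, `RAW_FILE`, and both functions are hypothetical. Schema-on-write rejects a bad row at load time. Schema-on-read keeps the raw text and types it only when a query reads it.

```python
import csv
import io
from datetime import date

RAW_FILE = "order_id,order_date,amount\n1,2024-01-05,19.99\n2,2024-01-06,5.00\n"

# Schema-on-write: types are fixed before loading, and every row is checked
# and converted at load time (the dedicated SQL pool style).
SALES_SCHEMA = {"order_id": int, "order_date": date.fromisoformat, "amount": float}


def load_with_schema(rows):
    """Convert each row to the declared types; a bad row raises ValueError
    and is rejected before it ever reaches the table."""
    return [{col: cast(row[col]) for col, cast in SALES_SCHEMA.items()} for row in rows]


# Schema-on-read: the raw text is stored as-is, and types are applied only
# when a query reads it (the data lake style).
def query_total_amount(raw_csv: str) -> float:
    """Parse the file at query time and type only the column the query needs."""
    reader = csv.DictReader(io.StringIO(raw_csv))
    return sum(float(r["amount"]) for r in reader)


table = load_with_schema(csv.DictReader(io.StringIO(RAW_FILE)))
print(table[0]["order_date"], round(query_total_amount(RAW_FILE), 2))
```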

Key table types in a dedicated SQL pool

- Regular Tables: These are the standard tables for storing structured data in a data warehouse.

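- External Tables: Pointers to data files stored outside the database (for example, in ADLS Gen2). They are useful for querying files directly and, with PolyBase, for fast bulk loading into regular tables. The COPY statement reads files directly and doesn’t need an external table.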

- Temporary Tables: Session-scoped tables for intermediate processing. They exist only for the duration of the session.

Optimizing performance with columnstore indexes

Clustered Columnstore Indexes (CCI) are central to analytics performance, and they are the default table structure in a dedicated SQL pool. They store data by column, which improves compression. They also speed up queries on large datasets because only the columns a query needs are read.
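The toy example below shows both columnstore benefits on a tiny table. First, an aggregate touches only one column. Second, a column of repeated values compresses well. It uses run-length encoding as one simple illustrative encoding. This is an assumption for teaching purposes: real columnstore segments use their own internal combination of encodings.

```python
from itertools import groupby

# A tiny sales table, first as rows (rowstore) ...
rows = [
    ("2024-01-01", "West", 120.0),
    ("2024-01-01", "West", 80.0),
    ("2024-01-01", "East", 50.0),
    ("2024-01-02", "East", 70.0),
]

# ... then the same data laid out column by column (columnstore).
columns = {
    "sale_date": [r[0] for r in rows],
    "region": [r[1] for r in rows],
    "amount": [r[2] for r in rows],
}


def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs. A column holds
    values of one type, often with repeats, so it compresses well."""
    return [(value, len(list(group))) for value, group in groupby(values)]


def total_amount(cols):
    """SUM(amount) reads only the 'amount' column, never the other columns."""
    return sum(cols["amount"])


print(run_length_encode(columns["region"]))  # [('West', 2), ('East', 2)]
print(total_amount(columns))                 # 320.0
```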

The effective design of these storage and indexing strategies is a key area that DBSync can handle, ensuring your Azure Synapse environment is optimally configured for data ingestion and analytics.


Key benefits of Azure Synapse Analytics

Azure Synapse Analytics simplifies complex data workflows while maintaining high performance. Let’s take a closer look at its benefits:

- Streamlined data management: Centralizing data in a data lake or data warehouse makes it easier for businesses to manage their data. This enables easier access to data and breaks down silos.

- Cost efficiency: Serverless SQL pools bill by the amount of data each query processes, so you pay only for the queries you run. Dedicated SQL pools provide provisioned capacity, so critical workloads get consistent, predictable performance.

- Real-time insights: Companies can make quick decisions by analyzing live data streams, like IoT sensor data or customer interactions, thanks to streaming data support.

- Collaboration: The unified interface of Synapse Studio makes it easy for data engineers, analysts, and business users to work together.

- 360-degree customer insights: Businesses can improve sales funnels, get customers more involved, and find growth opportunities by combining data from platforms like Salesforce with operational data.
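The cost-efficiency trade-off in the list above can be framed as a break-even calculation. This sketch assumes made-up prices (`SERVERLESS_PRICE_PER_TB`, `DEDICATED_PRICE_PER_HOUR`) and ignores storage and other charges. It compares paying per TB of data processed with paying for provisioned hours.

```python
# Hypothetical prices for illustration only; check current Azure pricing.
SERVERLESS_PRICE_PER_TB = 5.0    # assumed cost per TB of data processed
DEDICATED_PRICE_PER_HOUR = 1.5   # assumed cost of a small dedicated pool


def serverless_monthly(tb_processed: float) -> float:
    """Serverless: pay for the data your queries process."""
    return tb_processed * SERVERLESS_PRICE_PER_TB


def dedicated_monthly(hours_running: float) -> float:
    """Dedicated: pay for provisioned compute while it runs, busy or idle."""
    return hours_running * DEDICATED_PRICE_PER_HOUR


def break_even_tb(hours_running: float) -> float:
    """Monthly TB processed at which both models cost the same."""
    return dedicated_monthly(hours_running) / SERVERLESS_PRICE_PER_TB


print(break_even_tb(730))  # 219.0 TB/month for an always-on pool
```

Under these assumptions, light or unpredictable query volumes favor serverless. Heavy, steady workloads favor dedicated capacity.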

For instance, a bank could use Azure Synapse Analytics to combine transaction data with customer profiles, giving it a full picture of client behavior. As we discussed in our article on data mining for fraud detection, this could support targeted marketing campaigns or fraud detection models.

Best practices: Maintaining your Azure Synapse SQL warehouse

Azure Synapse SQL Pools need proper maintenance to perform well and stay cost-effective. The following practices help data engineers and administrators keep data warehousing running smoothly and reliably.

1. Managing workloads

In dedicated SQL pools, use workload groups and workload classifiers to allocate compute resources (measured in DWUs) and to set importance so the most critical tasks run first. This ensures that data ingestion processes get the resources they need. Serverless SQL pools scale automatically and don’t use workload groups.
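The idea behind classification can be modeled in a few lines of Python. This is a simplified sketch, not the pool’s actual algorithm. The real classifier weighs several attributes (member name, label, context, time window), while here a rule that matches both login and label simply beats a login-only rule. Group names such as `wg_ingest` and the `wg_default` fallback are hypothetical. The five importance levels match the values dedicated SQL pools accept.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# The five importance levels a dedicated SQL pool accepts, highest first.
IMPORTANCE_RANK = {"high": 0, "above_normal": 1, "normal": 2, "below_normal": 3, "low": 4}


@dataclass
class Classifier:
    workload_group: str
    member_name: str                 # login the rule applies to
    importance: str = "normal"
    wlm_label: Optional[str] = None  # optional query label that must also match


@dataclass
class Request:
    query_id: int
    login: str
    label: Optional[str] = None


def classify(req: Request, rules: List[Classifier]) -> Tuple[str, str]:
    """Return (workload_group, importance) for a request.

    Simplified precedence: a rule matching login and label beats a rule
    matching only the login. Unmatched requests go to a hypothetical
    'wg_default' group.
    """
    best, best_score = None, 0
    for rule in rules:
        if rule.member_name != req.login:
            continue
        if rule.wlm_label is not None and rule.wlm_label != req.label:
            continue
        score = 2 if rule.wlm_label is not None else 1
        if score > best_score:
            best, best_score = rule, score
    if best is None:
        return ("wg_default", "normal")
    return (best.workload_group, best.importance)


def dispatch_order(queue: List[Request], rules: List[Classifier]) -> List[int]:
    """Order queued requests so higher-importance work starts first
    (sorted() is stable, so ties keep arrival order)."""
    ranked = sorted(queue, key=lambda r: IMPORTANCE_RANK[classify(r, rules)[1]])
    return [r.query_id for r in ranked]


rules = [
    Classifier("wg_ingest", "etl_user", importance="high"),
    Classifier("wg_reports", "bi_user", importance="below_normal"),
    Classifier("wg_ingest", "bi_user", importance="above_normal", wlm_label="month_end"),
]
queue = [Request(1, "bi_user"), Request(2, "etl_user"),
         Request(3, "bi_user", "month_end"), Request(4, "adhoc_user")]
print(dispatch_order(queue, rules))  # [2, 3, 4, 1]
```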

2. Managing statistics

For accurate query optimization and faster results, keep your statistics up to date using AUTO_CREATE_STATISTICS, AUTO_UPDATE_STATISTICS, and manual updates after large loads.
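A simple way to decide which tables need a manual refresh after a large load is to compare row counts before and after the load. The sketch below assumes you already have those counts. The 20% threshold is an illustrative heuristic, not a Synapse default. It emits standard `UPDATE STATISTICS` statements for the tables that changed the most.

```python
from typing import Dict, List


def stale_statistics(before: Dict[str, int], after: Dict[str, int],
                     threshold: float = 0.2) -> List[str]:
    """Return UPDATE STATISTICS statements for tables whose row count
    changed by more than `threshold` (a fraction) since the last refresh."""
    statements = []
    for table, rows_now in sorted(after.items()):
        rows_then = before.get(table, 0)
        change = abs(rows_now - rows_then) / max(rows_then, 1)
        if change > threshold:
            statements.append(f"UPDATE STATISTICS {table};")
    return statements


before = {"dbo.FactSales": 1_000_000, "dbo.DimRegion": 50}
after = {"dbo.FactSales": 1_500_000, "dbo.DimRegion": 50, "dbo.FactReturns": 1_000}
for stmt in stale_statistics(before, after):
    print(stmt)
```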

3. Strategies for indexing

For better compression and query performance, use Clustered Columnstore Indexes (CCI) on large fact tables. For smaller dimension tables, think about using rowstore indexes.
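Why do "large" tables get CCI while smaller ones get rowstore? A dedicated SQL pool spreads every table across 60 distributions. Columnstore rowgroups compress best when each distribution (and each partition) holds roughly a million rows or more. The sketch below encodes that rule of thumb. The 1,000,000-row threshold is a commonly cited guideline, not a hard limit. It also shows how over-partitioning can undermine CCI.

```python
DISTRIBUTIONS = 60                 # dedicated SQL pools spread each table over 60 distributions
MIN_ROWS_PER_ROWGROUP = 1_000_000  # rule of thumb for well-compressed columnstore rowgroups


def suggest_index(row_count: int, partitions: int = 1) -> str:
    """Suggest CCI only if each distribution/partition slice can fill rowgroups."""
    rows_per_slice = row_count / (DISTRIBUTIONS * partitions)
    if rows_per_slice >= MIN_ROWS_PER_ROWGROUP:
        return "CLUSTERED COLUMNSTORE INDEX"
    return "HEAP or CLUSTERED INDEX (rowstore)"


print(suggest_index(100_000_000))                 # CLUSTERED COLUMNSTORE INDEX
print(suggest_index(100_000_000, partitions=12))  # HEAP or CLUSTERED INDEX (rowstore)
print(suggest_index(50_000))                      # HEAP or CLUSTERED INDEX (rowstore)
```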

4. Distributing data

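Choose hash, round-robin, or replicated distribution carefully for each table. As a rule of thumb, hash-distribute large fact tables on a high-cardinality column that’s often used in joins, replicate small dimension tables, and use round-robin for staging tables. The right strategy, which DBSync helps clients determine during ingestion, has a big effect on performance because it reduces data skew and data movement during queries.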
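To see why the choice of distribution column matters, the following sketch hashes keys into 60 buckets, mirroring the 60 distributions of a dedicated SQL pool, and then measures skew. MD5 is a stand-in: Synapse uses its own internal hash function. The skew formula, (largest − average) / largest, is one common way to express imbalance, not an official metric.

```python
import hashlib
from collections import Counter

DISTRIBUTIONS = 60


def distribution_for(key: str) -> int:
    """Map a distribution-column value to a bucket (MD5 is a stand-in hash)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % DISTRIBUTIONS


def skew_percent(keys) -> float:
    """(largest distribution - average) / largest, as a percentage."""
    counts = Counter(distribution_for(k) for k in keys)
    sizes = [counts.get(d, 0) for d in range(DISTRIBUTIONS)]
    largest = max(sizes)
    average = sum(sizes) / DISTRIBUTIONS
    return 100.0 * (largest - average) / largest


low_cardinality = ["US"] * 1000 + ["CA"] * 10          # e.g. a country column
high_cardinality = [f"customer-{i}" for i in range(60_000)]
print(round(skew_percent(low_cardinality), 1))   # close to 100: most distributions empty
print(round(skew_percent(high_cardinality), 1))  # small: rows spread evenly
```

A low-cardinality column puts almost all rows on one or two distributions, so one node does most of the work. A high-cardinality key spreads the rows evenly.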

5. Best practices for loading data

Use fast bulk-loading methods like the COPY statement or PolyBase, and load data in batches rather than row by row. DBSync’s Cloud Replication tool is built to follow these best practices, making data ingestion and resource use more efficient.
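Loading "in batches" simply means grouping rows into large chunks before each load. The helper below does that for any iterable. In practice, each batch might become a file staged in the data lake for COPY. That staging step is omitted here, and the batch size of 100,000 is an assumed value.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(rows: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of up to batch_size rows."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# One million rows as 10 bulk loads instead of one million single-row INSERTs.
load_count = sum(1 for _ in batched(range(1_000_000), 100_000))
print(load_count)  # 10
```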

6. Backup and recovery from disasters

Take advantage of Azure Synapse’s built-in automated restore points, and think about using geo-redundant options for strong disaster recovery.

7. Monitoring & alerting

Use Azure Monitor, Synapse Studio, and custom alerts to track performance and catch problems early so you can respond quickly.

8. Maintenance windows

Set maintenance windows for Dedicated SQL Pools so that service updates happen at times that minimize disruption.

How Azure Synapse Analytics supports modern use cases

Azure Synapse Analytics is great for situations where you need to process and analyze a lot of data. Here are some important uses:

1. Data warehousing

Azure Synapse Analytics is a modern data warehousing solution that scales beyond traditional single-server warehouses such as on-premises SQL Server. Its massively parallel processing (MPP) architecture keeps queries fast even on petabyte-scale datasets. Companies can store structured data in a dedicated SQL pool or query semi-structured and unstructured files in a data lake with a serverless SQL pool. This makes it well suited to sales analytics, inventory management, and financial reporting.

2. AI and machine learning

Azure Synapse Analytics supports the entire machine learning lifecycle, from preparing data to training and deploying models, thanks to its integration with Apache Spark and Azure Machine Learning. For instance, a retailer could use it to forecast inventory needs from past sales data, which would make the supply chain run more smoothly. This is in line with broader trends in enterprise AI platforms.

3. Analytics in real time

The platform can process streaming data, which lets you see things in real time, like tracking website traffic or looking at data from IoT sensors. This is especially useful for fields like manufacturing or logistics, where making decisions quickly is very important.

4. Visualizing data

Users can make interactive dashboards and reports when they connect to Power BI. For example, a marketing team could use data visualization techniques to see how well a campaign is doing by using natural language queries to get more detailed information.

Getting data into Azure Synapse: ELT, CDC, and Batch

For any kind of analysis, the most important first step is to get data into Azure Synapse Analytics. Azure has a lot of different tools for different types of data ingestion, from big historical loads to real-time streaming.

1. Batch data ingestion (for large and historical datasets)

Great for moving a lot of data or for loads that happen on a set schedule.

Azure Data Factory (ADF) and Synapse Pipelines:

- What it is: A cloud-based ETL/ELT service for moving and transforming data. Synapse Pipelines bring ADF’s pipeline capabilities directly into your Synapse workspace.

- How it works: It connects to more than 100 data sources (on-premises, cloud, or SaaS), extracts and transforms the data, and loads it into Azure Synapse SQL Pools or Data Lake Storage.

- Use Cases: Moving databases and loading data from different systems on a regular basis.

T-SQL COPY statement:

- What it is: A highly optimized T-SQL command for loading large volumes of data directly into Azure Synapse Dedicated SQL Pools.

- How it works: It quickly loads large datasets from Azure Data Lake Storage Gen2 or Blob Storage, supports multiple file types (CSV, Parquet, ORC), and offers configurable error handling. ADF’s copy activity can use it under the hood.

- Use Cases: Loading big files when the data is already in Azure storage.
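When loads are scripted, a common pattern is to generate the COPY statement from configuration. The sketch below builds one. It assumes the pool authenticates to storage with its managed identity, which must be configured separately. The storage URL is a placeholder. The options used (`FILE_TYPE`, `CREDENTIAL`, `FIRSTROW`, `MAXERRORS`) are documented COPY options, but check the full option list for your scenario.

```python
ALLOWED_FILE_TYPES = {"CSV", "PARQUET", "ORC"}


def build_copy_statement(table: str, source_url: str, file_type: str = "PARQUET",
                         first_row: int = 1, max_errors: int = 0) -> str:
    """Return a COPY INTO statement for a dedicated SQL pool.

    Assumes the pool authenticates to storage with its managed identity.
    Inputs are trusted configuration values, not user input.
    """
    file_type = file_type.upper()
    if file_type not in ALLOWED_FILE_TYPES:
        raise ValueError(f"unsupported file type: {file_type}")
    options = [
        f"FILE_TYPE = '{file_type}'",
        "CREDENTIAL = (IDENTITY = 'Managed Identity')",
    ]
    if file_type == "CSV":
        options.append(f"FIRSTROW = {first_row}")    # e.g. 2 to skip a header row
        options.append(f"MAXERRORS = {max_errors}")  # rejected rows tolerated before failing
    body = ",\n    ".join(options)
    return f"COPY INTO {table}\nFROM '{source_url}'\nWITH (\n    {body}\n);"


print(build_copy_statement(
    "dbo.FactSales",
    "https://examplelake.dfs.core.windows.net/raw/sales/*.csv",  # placeholder URL
    file_type="csv",
    first_row=2,
    max_errors=10,
))
```

Because the statement is built by string formatting, only feed it trusted configuration values, never user input.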

PolyBase:

- What it is: Technology in Azure Synapse Dedicated SQL Pools that lets you run T-SQL queries, through external tables, on data stored outside the database in Azure Data Lake Storage or Blob Storage.

- How it works: It’s important for ELT. Data lands in external storage and is exposed through external tables. It is then loaded into managed tables (for example, with CREATE TABLE AS SELECT), where it can be transformed.

2. Streaming and real-time CDC data ingestion

Important for situations where you need quick answers from data that is always coming in.

Azure Event Hubs:

- What it is: A service that lets you stream data and events on a very large scale.

- How it works: It takes in millions of events per second from a variety of sources and serves as the entry point for real-time event data.

- Use Cases: Getting application telemetry, clickstream data, and IoT data.

Azure IoT Hub:

- What it is: A tool made just for connecting, monitoring, and managing billions of IoT devices.

- How it works: It makes a safe, two-way connection for IoT device data to the cloud.

- Use Cases: Getting data from sensors and vehicle telemetry.

Azure Stream Analytics:

- What it is: A service that analyzes live data streams in real time.

- How it works: It ingests data from Event Hubs or IoT Hub, transforms and aggregates it with a SQL-like query language, and sends the results straight to Azure Synapse SQL Pools or Data Lake Storage for near-real-time dashboards.

- Use Cases: Detecting fraud in real time and getting live operational intelligence.
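A typical Stream Analytics aggregation counts events per fixed, non-overlapping time window. In its query language, this is written with `GROUP BY key, TumblingWindow(minute, 5)`. The Python sketch below reproduces just the windowing logic so the idea is visible. It assumes events arrive as (timestamp, key) pairs and ignores late or out-of-order events, which Stream Analytics handles with its own event-ordering policies. The helper `at` exists only to build sample timestamps.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def tumbling_counts(events, window: timedelta):
    """Count (timestamp, key) events per fixed, non-overlapping window.

    Returns {(window_end, key): count}; each window is labeled by its end time.
    """
    counts = defaultdict(int)
    for ts, key in events:
        index = (ts - EPOCH) // window          # which window the event falls in
        window_end = EPOCH + (index + 1) * window
        counts[(window_end, key)] += 1
    return dict(counts)


def at(hh, mm, ss=0):
    """Hypothetical helper: a UTC timestamp on 2024-01-01."""
    return datetime(2024, 1, 1, hh, mm, ss, tzinfo=timezone.utc)


events = [(at(12, 0, 30), "door"), (at(12, 4, 59), "door"),
          (at(12, 5), "door"), (at(12, 6), "temp")]
for (end, key), n in sorted(tumbling_counts(events, timedelta(minutes=5)).items()):
    print(end.strftime("%H:%M"), key, n)
```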

Apache Spark Pools (through Structured Streaming):

- What it is: Spark pools in Azure Synapse can work with data from streaming sources like Event Hubs or Kafka.

- How it works: It enables powerful, scalable real-time processing and complex transformations on streaming data before it reaches its destination.

- Use Cases: Machine learning on streaming data and advanced real-time analytics.

3. DBSync’s Cloud Replication (Optimized batch/CDC):

- DBSync makes it easier to get data from SaaS apps like Salesforce and Dynamics 365/Business Central, and from databases like SQL, Oracle, and MongoDB, into Azure Synapse.

- Key Benefit: It automates extraction and loading using efficient bulk-loading methods and reduces manual coding. It also supports incremental updates through Change Data Capture (CDC) for continuous sync, and it lets you transform data to prepare it for Synapse analytics.
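Incremental updates avoid reloading everything on each run. The sketch below shows the general idea with a timestamp watermark. This technique is simpler than true log-based CDC and is not DBSync’s implementation. It assumes each source row has an `id` and a `modified_at` column, both hypothetical here. A watermark approach cannot see hard deletes, whereas log-based CDC can.

```python
from datetime import datetime
from typing import Dict, List, Tuple


def extract_changes(source: List[dict], watermark: datetime) -> Tuple[List[dict], datetime]:
    """Return rows modified after the watermark, plus the new watermark."""
    changed = [r for r in source if r["modified_at"] > watermark]
    new_watermark = max((r["modified_at"] for r in changed), default=watermark)
    return changed, new_watermark


def upsert(target: Dict[int, dict], changes: List[dict]) -> None:
    """Insert new rows and overwrite updated ones, keyed on 'id'."""
    for row in changes:
        target[row["id"]] = row


source = [
    {"id": 1, "name": "Acme", "modified_at": datetime(2024, 1, 1, 9)},
    {"id": 2, "name": "Globex", "modified_at": datetime(2024, 1, 1, 10)},
]
target: Dict[int, dict] = {}

changes, watermark = extract_changes(source, datetime.min)   # first run: full load
upsert(target, changes)

source[0] = {"id": 1, "name": "Acme Corp", "modified_at": datetime(2024, 1, 2, 8)}
changes, watermark = extract_changes(source, watermark)      # next run: only the edit
upsert(target, changes)
print(len(changes), target[1]["name"])  # 1 Acme Corp
```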

Picking the right method

The best way to ingest data into Azure Synapse depends on:

- Data volume: Batch methods usually work better with large amounts of data.

- Data velocity: Streaming solutions are needed for real-time data.

- Data structure: It’s usually easier to copy structured data directly into SQL pools, but unstructured or semi-structured data does better when it lands in Data Lake first.

- Transformation needs: ADF’s Data Flows or Spark may be needed for complicated transformations, but COPY can be used for simple loads.

- Source systems: Some sources have built-in connectors you can use directly. Others, such as complex SaaS applications and ERPs, may require custom code or a tool like DBSync.
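As a rough decision aid, the criteria above can be turned into a small function that maps a workload to a chain of services. This is a hypothetical simplification. It covers only velocity, structure, and transformation needs, and the service chains are illustrative choices drawn from the tools described in this section, not an official Microsoft recommendation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Workload:
    real_time: bool           # data velocity: must results arrive in seconds?
    structured: bool          # data structure: tabular vs semi/unstructured
    complex_transforms: bool  # transformation needs


def suggest_ingestion(w: Workload) -> List[str]:
    """Map the criteria to a rough chain of Azure services."""
    if w.real_time:
        processor = "Spark Structured Streaming" if w.complex_transforms else "Stream Analytics"
        return ["Event Hubs or IoT Hub", processor]
    steps = ["Synapse Pipelines (batch)"]
    if not w.structured:
        steps.append("land files in ADLS Gen2")
    if w.complex_transforms:
        steps.append("Data Flows or Spark")
    steps.append("COPY into dedicated SQL pool")
    return steps


print(suggest_ingestion(Workload(real_time=False, structured=True, complex_transforms=False)))
```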

DBSync plays a critical role by simplifying the complexities of both batch and real-time data ingestion. Our expertise ensures that data from your disparate source systems, including challenging application data, is efficiently and reliably moved into Azure Synapse, ready for advanced analytics and business intelligence. We bridge the gap between your operational systems and your analytical platform, enabling a true data-driven enterprise.

Automating multi-source ingestion with DBSync

Azure Synapse Analytics has many powerful features, but businesses may still run into challenges such as managing costs or integrating data. DBSync addresses these challenges with tailored solutions:

- Easier integration: DBSync’s Cloud Replication tool moves data from SaaS apps and databases to Azure Synapse Analytics without any coding. It supports CDC-based incremental updates, bidirectional data flow, and custom transformations, so the data is ready for analysis.

- Cost optimization: DBSync helps keep costs down by reducing data duplication and giving you detailed reports on how resources are being used so you can stay within your budget.

- Easy deployment: With a no-code interface, DBSync lets businesses set up integrations quickly, even without deep technical expertise.

By leveraging DBSync’s platform, companies can maximize the value of Azure Synapse Analytics, ensuring smooth data workflows and optimized performance, as discussed in our article on big data for small businesses.

To sum up

Businesses that want to use their data to get ahead will find that Azure Synapse Analytics is a game-changer. Its unified architecture, which includes data lakes, data warehousing, and advanced analytics, makes it easy for businesses to turn raw data into useful information. This platform gives you the speed and flexibility you need to do well in a world where data is king, whether you’re optimizing sales, building machine learning models, or making dashboards that work in real time. With DBSync’s integration solutions, businesses can get past common problems, make data workflows more efficient, and get the most out of Azure Synapse Analytics. This will lead to smarter decisions and long-term growth.

FAQs

What is Azure Synapse Analytics?

Azure Synapse Analytics is a cloud-based service that integrates big data processing and data warehousing, combining Apache Spark and SQL analytics to derive insights from data lakes and data warehouses.

How does Azure Synapse Analytics simplify data integration?

It unifies data from diverse sources like on-premises systems, cloud storage, and streaming platforms, enabling seamless ingestion, transformation, and querying without complex data wrangling.

What are the key benefits of using Azure Synapse Analytics?

Benefits include simplified data integration, high performance with massively parallel processing, enterprise-grade security, and easy integration with Azure services and tools like Power BI.

How does DBSync enhance Azure Synapse Analytics usage?

DBSync provides pre-built integrations for SaaS apps and databases, enabling rapid data replication, cost optimization, and user-friendly workflows without custom coding.

What types of analytics can Azure Synapse Analytics support?

It supports business intelligence reporting, machine learning model training, and interactive data visualization through integrations with Apache Spark, Azure Machine Learning, and Power BI.