How to Secure Data Replication: Controlling Frontend and Backend Vulnerabilities

Data replication supports high availability, disaster recovery, and consistency across systems. Because replicated data often includes sensitive information, the replication process itself must be adequately secured. This article covers practical strategies for doing so: controlling front-end data vulnerabilities through masking, access control, and auditing, and understanding and mitigating backend software vulnerabilities.

Controlling Front-end Data Vulnerabilities

1. Data Masking

Data masking transforms sensitive information into artificial but realistic-looking data, so the data is of little use to anyone who intercepts it during replication.

Static Masking:

Static data masking permanently replaces data values with anonymized ones, using encryption or another anonymization technique. The same masking applies to every user and application that accesses the data. It is normally performed on a replica of the production database, so the original data is preserved and the masked replica can be used safely in non-production environments.

Advantage: The most significant advantage of static data masking is that the masked copy no longer contains the sensitive values, so it cannot reveal them.

Drawback: You have to maintain both a masked and an unmasked version of your data, which can become expensive as the volume of data an organization holds keeps growing.

Static data masking excels in two use cases:

- Cloud migrations: When you mask data before migrating it, sensitive data is not exposed during the migration. Under a cloud vendor’s shared responsibility model, you typically remain responsible for protecting your own data, so moving masked data also reduces your exposure if a breach does occur.

- Creating and maintaining lower software environments: Most companies keep separate environments for developing and testing software. Statically masked copies of production data give those environments realistic data while keeping the sensitive values protected.
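As a rough illustration of static masking, the sketch below replaces sensitive fields in a copy of a record with deterministic tokens derived from a keyed hash (HMAC-SHA256). The keyed hash is only one possible anonymization technique, and the key, field names, and helper functions are hypothetical choices made for this example, not part of any particular replication tool.

```python
import hashlib
import hmac

# Hypothetical masking key; a real deployment would load it from a secrets manager.
MASKING_KEY = b"example-key-not-for-production"


def mask_value(value: str, prefix: str = "masked") -> str:
    """Replace a sensitive value with a deterministic token from a keyed hash."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:12]}"


def mask_record(record: dict, sensitive_fields: set) -> dict:
    """Return a masked copy of the record; the original is left untouched."""
    return {
        key: mask_value(str(val), prefix=key) if key in sensitive_fields else val
        for key, val in record.items()
    }


# Hypothetical production row and the masked copy destined for a test environment.
production_row = {"id": 42, "name": "Jane Doe", "email": "jane@example.com", "plan": "pro"}
masked_row = mask_record(production_row, {"name", "email"})
print(masked_row)
```

Because the token is deterministic, the same input always maps to the same masked value, which keeps joins between masked tables consistent. Whether that property is desirable depends on the data and the threat model.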


Dynamic Data Masking

Dynamic data masking masks sensitive data in real time as users and applications access it. It enforces role-based access controls and leaves the original data values intact. Its main advantage is flexibility: masking policies can be tailored to your needs or to specific compliance requirements, giving fine-grained control over who can see which data and under what circumstances. Its most significant drawback is that setting up and governing masking policies takes time.

Dynamic data masking substantially widens the range of uses for your data. Encrypting sensitive data in the database is a data management best practice, and dynamic data masking can decrypt and mask that data in real time when it is used. Here are a few use cases where dynamic data masking helps protect sensitive data:

- Real-time access control use cases: Dynamic data masking ensures that users see only the sensitive data they are authorized to view. For instance, a customer support representative handling an online transaction would see only the last four digits of a credit card number, and a care coordinator involved in patient care would see only the last four digits of a social security number. In both cases, a fraud investigator could be granted cleartext access to the full customer or patient record.

- Compliance use cases: Many regulatory frameworks (PCI DSS, GDPR, CCPA, HIPAA) require that access to sensitive data be limited according to the context of the data and the permissions granted to each type of user. Dynamic data masking supports compliance by enforcing these limits through role-based controls.

- Data sharing use cases: Dynamic data masking protects sensitive data that is shared outside the organization. Companies can collaborate on or use the shared data while the sensitive values stay protected.
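The last-four-digits scenario can be sketched as a read-time masking function. This is a minimal illustration, assuming hypothetical role names and a simple rule that only one role sees cleartext. Real dynamic masking is usually configured as a policy in the database or data platform rather than written in application code.

```python
def mask_last_four(value: str) -> str:
    """Hide every digit except the last four, keeping separators in place."""
    digits_total = sum(ch.isdigit() for ch in value)
    seen = 0
    out = []
    for ch in value:
        if ch.isdigit():
            seen += 1
            out.append(ch if seen > digits_total - 4 else "*")
        else:
            out.append(ch)
    return "".join(out)


# Hypothetical roles that may see cleartext values.
FULL_ACCESS_ROLES = {"fraud_investigator"}


def read_field(stored_value: str, role: str) -> str:
    """Apply the masking policy at read time; the stored value is never changed."""
    if role in FULL_ACCESS_ROLES:
        return stored_value
    return mask_last_four(stored_value)


card = "4111-1111-1111-1111"
print(read_field(card, "support_rep"))         # masked view
print(read_field(card, "fraud_investigator"))  # cleartext view
```

Unknown roles fall through to the masked view, so the default is the restrictive one.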


2. Role-Based Access Control (RBAC)

Role-based access control (RBAC) restricts system access to authorized users according to their organizational roles. Allowing only users with the appropriate permissions to perform replication tasks ensures those tasks are carried out in accordance with the organization’s rules.

Key Practices:

- Define Roles and Permissions: Clearly define roles and permissions for all replication tasks. For example, an administrator might have complete control, while other users are given read-only access.

- Least Privilege Principle: Grant each user only the permissions they need, minimizing the risk of unauthorized access.

- Periodic Review: Regularly review roles and permissions and update them to reflect organizational changes, reducing potential risks.

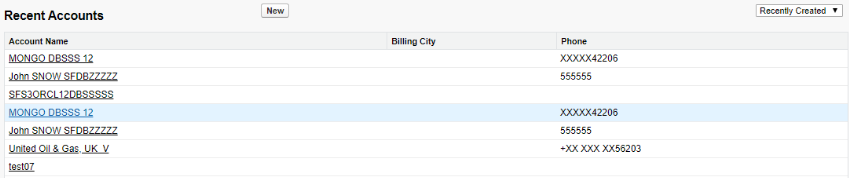

Example

Granting users role-based access that determines how they can work with the data set

Here, users can only read the data and run particular commands on it.
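A role-to-permission mapping of this kind can be sketched as follows. The role names, permission names, and the `start_replication` function are hypothetical and exist only to show the check; they do not correspond to any specific product.

```python
from enum import Flag, auto


class Permission(Flag):
    READ = auto()
    RUN_REPLICATION = auto()
    CONFIGURE = auto()


# Hypothetical roles: administrators have complete control, analysts are read-only.
ROLE_PERMISSIONS = {
    "administrator": Permission.READ | Permission.RUN_REPLICATION | Permission.CONFIGURE,
    "operator": Permission.READ | Permission.RUN_REPLICATION,
    "analyst": Permission.READ,
}


def is_allowed(role: str, needed: Permission) -> bool:
    """Unknown roles get no permissions (least privilege by default)."""
    granted = ROLE_PERMISSIONS.get(role, Permission(0))
    return (granted & needed) == needed


def start_replication(user: str, role: str) -> str:
    """Refuse to start a replication job unless the role allows it."""
    if not is_allowed(role, Permission.RUN_REPLICATION):
        raise PermissionError(f"{user} ({role}) may not run replication jobs")
    return f"replication started by {user}"


print(start_replication("alice", "operator"))
print(is_allowed("analyst", Permission.RUN_REPLICATION))
```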

3. Audit and Monitoring

Auditing and monitoring trace and log replication activities so that suspicious ones are identified promptly, which supports data integrity and accountability.

Key Practices

All interactions with data and all system changes should be fully logged. Every access log entry should include the user ID, the file name, the time of access, and the system activity.

Change logs record details of all insertions, updates, and deletions. Each entry includes the user ID, the date and time of the modification, the type of modification, and the “before” and “after” values. Data replication logs usually record start and completion times, data checksums, and success or failure on each server involved.
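The log fields described above can be sketched as plain dictionaries. The field names, the SHA-256 checksum, and the server name are assumptions made for illustration; an actual replication tool will define its own log schema.

```python
import hashlib
import json
from datetime import datetime, timezone


def checksum(payload: bytes) -> str:
    """SHA-256 digest used to compare data on the source and the replica."""
    return hashlib.sha256(payload).hexdigest()


def change_log_entry(user_id, table, operation, before, after):
    """Record who changed what, when, and the before/after values."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "table": table,
        "operation": operation,
        "before": before,
        "after": after,
    }


def replication_log_entry(server, payload, started_at, finished_at, received_checksum):
    """Record timing, checksum, and success or failure for one target server."""
    expected = checksum(payload)
    return {
        "server": server,
        "started_at": started_at.isoformat(),
        "finished_at": finished_at.isoformat(),
        "checksum": expected,
        "status": "success" if received_checksum == expected else "failure",
    }


# Hypothetical change and replication run.
change = change_log_entry("u-17", "customers", "update",
                          {"plan": "basic"}, {"plan": "pro"})
payload = json.dumps({"id": 42, "plan": "pro"}, sort_keys=True).encode("utf-8")
started = datetime.now(timezone.utc)
finished = datetime.now(timezone.utc)
entry = replication_log_entry("replica-1", payload, started, finished, checksum(payload))
print(json.dumps(change, indent=2))
print(json.dumps(entry, indent=2))
```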

Therefore, it is crucial to go beyond implementing security best practices and also account for the vulnerabilities found in the software itself.

Backend Data Vulnerabilities

A vulnerability is a flaw or weakness in software that allows an intruder to gain unauthorized access to the target system or cause other unintended behavior. These weaknesses can stem from coding mistakes, design flaws, or misconfigurations, and they can pose serious security risks. Attackers can exploit vulnerabilities to gather sensitive information, disrupt services, or take over the affected system.

Types of Vulnerabilities

Design Flaws

These are weaknesses inherent in the design of the software that make it vulnerable to attack. Such defects originate in the planning and design phases and are often costly to fix later, since correcting them can require substantial redesign.

Examples include insecure authentication mechanisms, flawed access control systems, and poor data encryption strategies. Correcting design flaws largely comes down to understanding security principles and building them into the software upfront.

Coding Defects

These are errors in the code that, when exploited, cause the software to perform unintended actions. Such bugs can create vulnerabilities that attackers exploit. Regular code reviews, static analysis tools, and adherence to secure coding standards all reduce such mistakes.

Configuration Vulnerabilities

These vulnerabilities stem from improperly or carelessly configured software, operating systems, or network devices. Examples include default passwords, over-permissive access controls, and exposed administrative interfaces. Proper configuration management is a must, and configurations should be audited at regular intervals.
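A periodic configuration audit can start as a simple rule check. The sketch below looks for the three example problems named above. The configuration keys and the list of default passwords are hypothetical and would need to be adapted to the system being audited.

```python
# Hypothetical list of well-known default passwords.
DEFAULT_PASSWORDS = {"admin", "password", "changeme", "root"}


def audit_config(config: dict) -> list:
    """Return a list of findings for a (hypothetical) service configuration."""
    findings = []
    if config.get("admin_password") in DEFAULT_PASSWORDS:
        findings.append("default administrator password in use")
    if config.get("admin_interface_bind") == "0.0.0.0":
        findings.append("administrative interface exposed on all network interfaces")
    if "*" in config.get("allowed_roles", []):
        findings.append("wildcard role grants over-permissive access")
    return findings


risky = {"admin_password": "changeme", "admin_interface_bind": "0.0.0.0",
         "allowed_roles": ["*"]}
hardened = {"admin_password": "s7!kQ2-long-unique", "admin_interface_bind": "127.0.0.1",
            "allowed_roles": ["administrator"]}

for finding in audit_config(risky):
    print("FINDING:", finding)
print("hardened config findings:", audit_config(hardened))
```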

Third-Party Vulnerabilities

Third-party vulnerabilities originate in the libraries or external services that the software uses. Heavy reliance on third-party components, including open-source libraries, plugins, and APIs, means their flaws become your flaws if proper care is not taken. Mitigation means keeping third-party components up to date and following security advisories so that known vulnerabilities are avoided.

Exploitation:

Attackers can leverage such flaws to steal data, propagate malware, or disrupt services. Exploitation involves applying a particular technique to a given vulnerability to achieve a malicious goal. The techniques, and the level of risk they carry, vary: phishing attacks that deliver malicious payloads, exploitation of unpatched software to gain unauthorized access, and ransomware attacks that encrypt data to extort money. Other exploitation methods range from code injection to privilege escalation.

Code injection means inserting malicious code into an application, as in SQL injection or cross-site scripting (XSS). Privilege escalation means that an attacker gains a higher level of access than intended, usually through flaws in operating system or application permissions. Other methods include buffer overflows, man-in-the-middle attacks, and social engineering techniques.
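To make the SQL injection case concrete, the sketch below contrasts a query built by string concatenation with a parameterized query, using Python's built-in `sqlite3` module and an in-memory database. The table and function names are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO customers (name) VALUES (?)", [("alice",), ("bob",)])


def find_customer_unsafe(name: str):
    # Vulnerable: user input is concatenated directly into the SQL text.
    query = f"SELECT id, name FROM customers WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_customer_safe(name: str):
    # Parameterized: the value is passed separately and never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM customers WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"
print(len(find_customer_unsafe(malicious)))  # the injected OR clause matches every row
print(len(find_customer_safe(malicious)))    # no customer has this literal name
```

The parameterized form treats the whole input as a single value, so the quote characters in the malicious string have no effect on the query structure.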

Discovery

A vulnerability may be discovered manually, through automated scanning tools, or accidentally. Automated scanning tools quickly check software for common vulnerabilities and misconfigurations, providing a first-line assessment. Flaws that automated tools miss are typically found by security experts who test the applications manually.

Some vulnerabilities are found accidentally by users themselves, for example during system maintenance. Once discovered, vulnerabilities are often added to databases such as the Common Vulnerabilities and Exposures (CVE) program.

The CVE system provides standardized identifiers and descriptions for vulnerabilities, helping organizations track and manage them. The National Vulnerability Database (NVD), together with vendor security advisories, is among the primary resources supporting vulnerability management.


Impact of data vulnerabilities:

Software vulnerabilities matter because they can lead to financial loss, data breaches, or reputational damage.

- Organizations can be hit with high monetary costs from data breaches, legal penalties, and even loss of business.

- Data breaches could lead to sensitive information being stolen, including personal data, financial records, and intellectual property.

- Reputational damage erodes clients’ trust in the organization and may affect its long-term success.

- In critical systems, vulnerabilities may compromise physical human safety.

- Vulnerabilities can also affect critical infrastructure such as health systems, industrial control systems, and transportation networks.

Mitigating backend vulnerabilities

Security flaws in software must be corrected with updates or patches.

- The simplest practice is to update and patch systems, which closes security gaps before they can be exploited.

- Organizations must implement a well-defined patch management process to ensure that all software and systems are kept current.

- Coding defects can be fixed through secure coding practices and rigorous testing.

- Secure coding guidelines, code reviews, and testing at the earliest possible stage of development help detect or eliminate vulnerabilities.
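One small piece of a patch management process is checking installed versions against the minimum versions known to contain a fix. The sketch below assumes simple dotted numeric version strings, and the component names and version numbers are made up. In practice the minimum versions would come from vendor security advisories.

```python
def parse_version(text: str) -> tuple:
    """Turn '2.3.1' into (2, 3, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical inventory of installed components.
INSTALLED = {"replication-agent": "2.3.1", "db-driver": "5.0.0", "web-console": "1.9.4"}

# Hypothetical minimum versions that contain the relevant security fixes.
MINIMUM_PATCHED = {"replication-agent": "2.3.4", "db-driver": "4.8.2"}


def needs_patch(installed: dict, minimum: dict) -> dict:
    """Return components whose installed version is older than the patched one."""
    outdated = {}
    for name, required in minimum.items():
        current = installed.get(name)
        if current is not None and parse_version(current) < parse_version(required):
            outdated[name] = (current, required)
    return outdated


for name, (current, required) in needs_patch(INSTALLED, MINIMUM_PATCHED).items():
    print(f"{name}: installed {current}, patch to at least {required}")
```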

Enhanced Vulnerability Management Best Practices

Organizations should implement vulnerability management to assess and address software vulnerabilities regularly.

1. Implement a Comprehensive Vulnerability Management Program

- Organizations should establish a robust vulnerability management program to continuously assess and mitigate software vulnerabilities.

- This proactive approach is crucial to identifying, prioritizing, and addressing security risks before malicious actors can exploit them.

2. Systematic Identification, Prioritization, and Remediation

- A well-structured vulnerability management program systematically identifies vulnerabilities across the organization’s software and systems. This includes regularly conducting vulnerability assessments, penetration testing, and security audits to uncover potential weaknesses.

- Once identified, these vulnerabilities should be prioritized based on risk level, enabling the organization to address the most critical issues first.
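Risk-based prioritization can be sketched as a sort over findings. The example assumes each finding carries a severity score on a 0 to 10 scale (as a CVSS base score does) and adds a hypothetical bonus for internet-facing systems. The identifiers and the weighting are illustrative only, and a real program would define its own risk model.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    identifier: str        # hypothetical internal ticket ID
    severity: float        # 0.0 to 10.0, for example a CVSS base score
    internet_facing: bool  # whether the affected system is exposed externally


def risk_score(finding: Finding) -> float:
    """Hypothetical weighting: exposed systems get a fixed bonus."""
    return finding.severity + (2.0 if finding.internet_facing else 0.0)


def prioritize(findings: list) -> list:
    """Order findings so the highest-risk items are remediated first."""
    return sorted(findings, key=risk_score, reverse=True)


backlog = [
    Finding("VULN-A", severity=9.1, internet_facing=False),
    Finding("VULN-B", severity=7.5, internet_facing=True),
    Finding("VULN-C", severity=4.0, internet_facing=True),
]

for item in prioritize(backlog):
    print(item.identifier, risk_score(item))
```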

3. Essential Components of a Vulnerability Management Program

- Regular vulnerability assessments should evaluate the security posture of software and systems. Penetration testing should be employed to simulate real-world attacks and identify potential entry points for attackers.

- Security audits are necessary to ensure compliance with security policies and standards and to identify gaps in the existing security framework.

4. Developer Training for Secure Code Practices

- Developers are on the front lines of software security. It is essential to provide them with ongoing training to write secure code and to recognize common vulnerabilities.

- Training should not be a one-time event but an ongoing process that includes updates on the latest security threats and secure coding practices.

5. Ongoing Education on Emerging Threats

- Continuous education is key to maintaining a high level of security awareness among developers. This includes participation in secure coding workshops, security conferences, and access to up-to-date resources on emerging threats. Such initiatives help developers stay informed and equipped to implement the latest security measures in their code.

6. User Awareness and Software Hygiene

- Users also play a critical role in maintaining security. They should be educated about the importance of being cautious with the software they install, ensuring that they download applications from trusted sources, and applying updates as soon as they are available.

- Timely updates often include patches for newly discovered vulnerabilities, making them crucial to maintaining software security.

7. User Education on Security Best Practices

- Educating users on security best practices, such as recognizing phishing attacks, avoiding social engineering tactics, and practicing safe online behavior, is vital in reducing the risk of exploitation.

- Awareness campaigns, training sessions, and clear communication about the importance of these practices can empower users to contribute to the organization’s overall security posture.

By following these best practices, organizations can create a comprehensive vulnerability management program that addresses current security threats and evolves to meet new challenges in an ever-changing digital landscape.

Conclusion

Effective data replication supports operability and availability, but the integrity and security of the replicated data must be carefully protected against known vulnerabilities. Data masking techniques, both static and dynamic, protect sensitive information from outside parties during replication. RBAC further improves security by limiting system access to authorized entities, as determined by predefined roles and permissions. Strong auditing and monitoring are also essential for quickly identifying illicit activity and responding to it.

This article has also underlined that software vulnerabilities, including those arising from third-party components, configuration flaws, and design flaws, pose various hazards. All of them can be dangerous if appropriate mitigation techniques, such as consistent patch management, secure coding standards, and thorough vulnerability assessment, are not implemented.