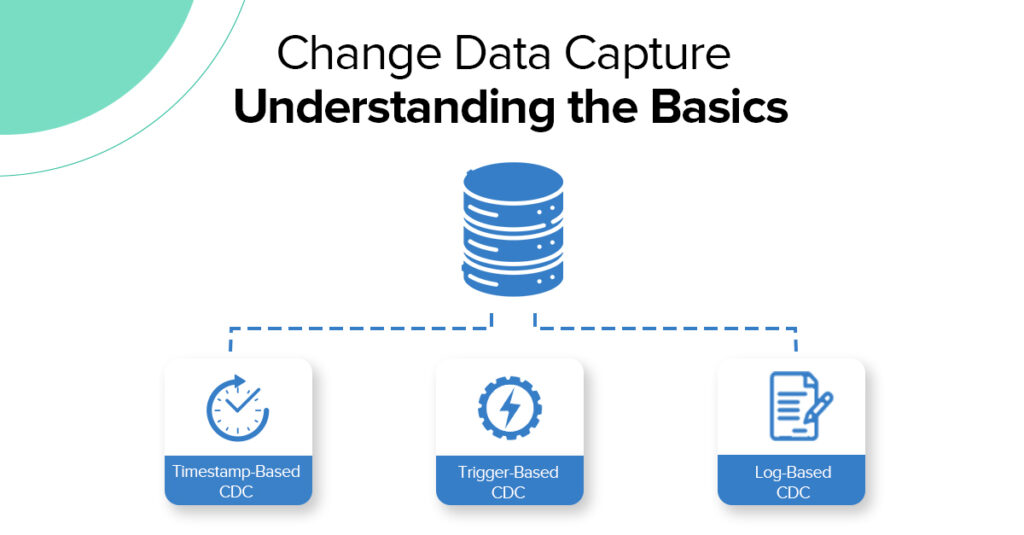

Change Data Capture – Understanding the Basics

In today’s digital age, having timely and accurate information is crucial for businesses to make informed decisions. Change Data Capture (CDC) is like a helpful assistant that keeps track of your real-time data, ensuring you’re always in the loop. This guide will explain why CDC matters and how it can boost your business.

What is Change Data Capture (CDC)?

CDC helps companies track changes in their databases. It identifies which records have been inserted, updated, or deleted and shares that information with other systems across the company. This ensures everyone is looking at the same information. Because CDC captures only the changes rather than entire tables, it saves time and keeps everything running smoother and faster.

Why is CDC important?

Having access to fresh information is crucial for making informed decisions. CDC monitors your database, letting you know about changes as they happen. This means you can base decisions on the latest data and keep everything consistent across your systems.

Implementing CDC helps with efficient data replication and real-time analytics, but a few points need to be considered.

Some of the benefits of using CDC include:

- Real-time data availability

The most significant benefit of change data capture is that it keeps data consistent across different systems. Every change made in the source database is replicated to the target database in near real time.

You can try it with our Snowflake Integration tool by requesting a demo and starting a free 14-day trial.

Move data within a few minutes from a data source to the target

- Improved efficiency

Change data capture can reduce the amount of data that needs to be transferred and processed by replicating only the changes made to the source database rather than the entire database.

Capture only the data that changed at the source

- Cost Saving

Change data capture can also help reduce costs. By limiting the load on the source database and reducing the amount of data that needs to be transferred and processed, it can lower bandwidth and storage costs. Over time, this can make it a more cost-effective solution for businesses.

- Faster time to insight

Reducing the time it takes to update data in all the destination databases helps organizations gain insight into data in near real-time, reducing the latency caused by legacy data replication methods.

Cons of CDC

Every technology has pros and cons, and change data capture (CDC) is no exception. Any organization adopting a technology should understand both its benefits and its drawbacks, so here are a few points to consider when implementing CDC.

- Resource Utilization

CDC helps an organization reduce processing overhead, but it can also increase storage and network bandwidth utilization, especially in environments that produce a high volume of transaction data.

- Dependency of Database Support

CDC can sometimes be hindered by the limitations or incompatibilities of different databases, which can make it harder for an organization to adopt.

- Concern about capturing sensitive data

An organization needs to keep track of which data its CDC implementation captures. Otherwise, it might capture sensitive information edited in its databases and expose the organization to compliance issues.
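One way to reduce the risk of capturing sensitive data is to mask selected columns before a change event leaves the source environment. The following is a minimal sketch, not a definitive implementation. The event layout (`op`, `table`, `row`) and the column names in `SENSITIVE_COLUMNS` are assumptions made for illustration.

```python
# Hypothetical column names; replace them with the sensitive fields in your schema.
SENSITIVE_COLUMNS = {"ssn", "card_number"}


def redact_change(event, sensitive=SENSITIVE_COLUMNS, mask="***"):
    """Return a copy of a change event with sensitive column values masked."""
    redacted = dict(event)
    redacted["row"] = {
        column: (mask if column in sensitive else value)
        for column, value in event["row"].items()
    }
    return redacted


# Assumed event shape: operation type, table name, and the changed row.
event = {
    "op": "update",
    "table": "customers",
    "row": {"id": 7, "name": "Ada", "ssn": "123-45-6789"},
}
safe_event = redact_change(event)
print(safe_event["row"])
```

The original event is left untouched, so the unmasked value never has to be copied downstream.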

Best Practices for Implementing CDC

Despite these challenges, following best practices when implementing CDC helps organizations improve their data efficiency and decision-making capabilities.

- Identify critical data sources: Identify the essential data that needs to be updated in real time and apply CDC to it. Not all data is business-critical, and applying CDC to every data source can unnecessarily increase storage and hinder insight, because unnecessary data can skew results.

- Maintain scalability: Ensure that your CDC implementation can scale with your changing data needs and growing data volumes.

- Ensure integrity: Data integrity is crucial for any business, and organizations should ensure its maintenance while moving data from one system to another.

- Monitor and optimize performance: Monitor both the data changes and the performance of your CDC implementation. Changes propagate to all connected databases, so an unnoticed problem can cause data discrepancies.
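The integrity and monitoring practices above can be supported by periodically comparing a fingerprint of the source data with one of the target data. The sketch below is one possible approach, assuming rows are available as comparable tuples. The function name `table_fingerprint` and the sample rows are hypothetical.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, checksum) for an iterable of row tuples."""
    digest = hashlib.sha256()
    count = 0
    # Sort first so that row order does not affect the checksum.
    for row in sorted(rows):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


# Hypothetical sample data: same rows, different order.
source_rows = [(1, "alice"), (2, "bob")]
target_rows = [(2, "bob"), (1, "alice")]

in_sync = table_fingerprint(source_rows) == table_fingerprint(target_rows)
print("in sync:", in_sync)
```

A mismatch in either the count or the checksum signals a discrepancy worth investigating. It does not say which row differs.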

How does CDC work?

CDC monitors your database for changes. When it spots something new, it determines what information is related to the change and prepares it to share with other parts of your business. It ensures everything’s accurate and sends it to the right place without slowing down your primary database.

Timestamp-based Change Data Capture

Timestamp-based CDC leverages a timestamp column in the table and retrieves only the rows that have changed since data was last extracted from the same database.

- Pros

- The simplest method to extract incremental data with CDC

- Requirements

- At least one timestamp field is required for implementing timestamp-based CDC

- The timestamp column must be updated every time a row changes

- Cons

- Data integrity can suffer with this method. If a row is deleted from the table, no row remains to carry a DATE_MODIFIED value, so the deletion is not captured.

- Can slow production performance by consuming source CPU cycles

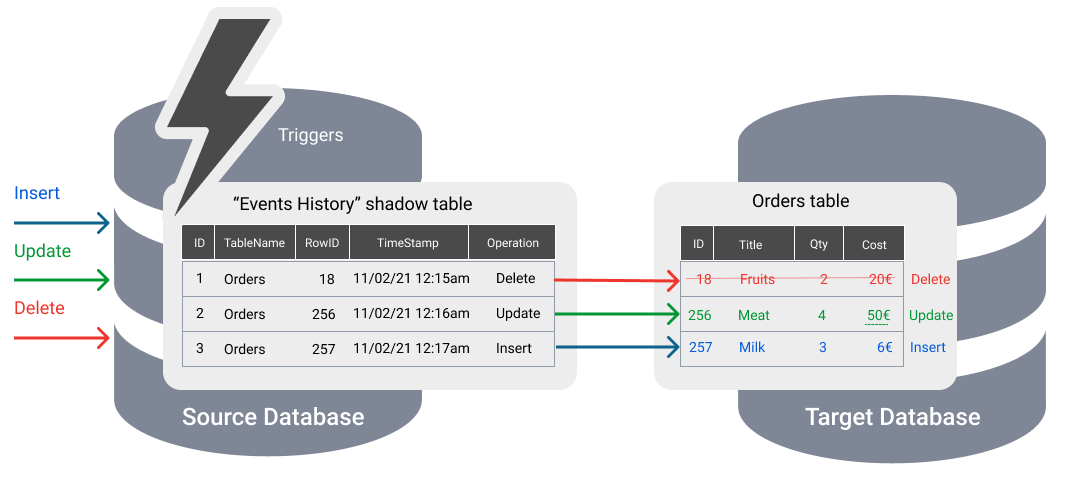
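The timestamp-based approach can be sketched with an in-memory SQLite database. This is a minimal illustration under stated assumptions: the `orders` table, its columns, and the `extract_changes` helper are hypothetical, and timestamps are stored as ISO 8601 strings so that string comparison matches chronological order.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, date_modified TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, "shipped", "2024-01-01T10:00:00"),
        (2, "pending", "2024-01-02T09:30:00"),
        (3, "pending", "2024-01-03T08:15:00"),
    ],
)


def extract_changes(conn, last_extracted):
    """Return rows whose date_modified is later than the previous extraction."""
    cursor = conn.execute(
        "SELECT id, status, date_modified FROM orders "
        "WHERE date_modified > ? ORDER BY date_modified",
        (last_extracted,),
    )
    return cursor.fetchall()


# Rows 2 and 3 were modified after the previous extraction time.
changed = extract_changes(conn, "2024-01-01T12:00:00")

# A hard delete leaves no row behind, so the next extraction cannot see it.
conn.execute("DELETE FROM orders WHERE id = 1")
after_delete = extract_changes(conn, "2024-01-03T08:15:00")
```

The second query returns nothing even though a row was deleted, which is the integrity gap described in the cons above.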

Trigger-based Change Data Capture

Trigger-based CDC defines database triggers that record each change in shadow tables, which act as a change log.

- Pros

- Shadow tables can store an entire row to track every column change.

- They can also store the primary key and operation type (insert, update, or delete).

- Cons

- Increases processing overhead

- Slows down source production operations

- Impacts application performance

- It is often not allowed by database administrators

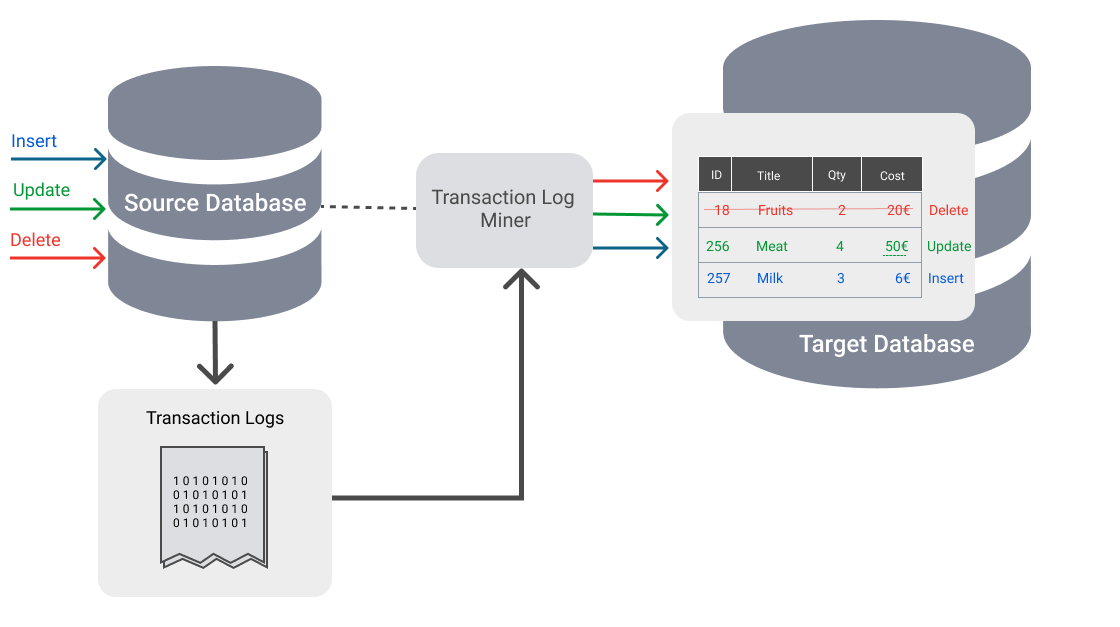
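A trigger-based setup can be sketched with SQLite, which supports triggers natively. This is a simplified illustration, not a production design. The `customers` table, the `customers_changes` shadow table, and the trigger names are all hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

-- Shadow table: one row per captured change.
CREATE TABLE customers_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    operation TEXT,
    customer_id INTEGER,
    email TEXT
);

CREATE TRIGGER customers_ins AFTER INSERT ON customers
BEGIN
    INSERT INTO customers_changes (operation, customer_id, email)
    VALUES ('insert', NEW.id, NEW.email);
END;

CREATE TRIGGER customers_upd AFTER UPDATE ON customers
BEGIN
    INSERT INTO customers_changes (operation, customer_id, email)
    VALUES ('update', NEW.id, NEW.email);
END;

CREATE TRIGGER customers_del AFTER DELETE ON customers
BEGIN
    INSERT INTO customers_changes (operation, customer_id, email)
    VALUES ('delete', OLD.id, OLD.email);
END;
""")

# Each statement below fires one trigger and adds one shadow-table row.
conn.execute("INSERT INTO customers VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

change_log = conn.execute(
    "SELECT operation, customer_id, email FROM customers_changes ORDER BY change_id"
).fetchall()
```

Every write to `customers` now also writes to the shadow table inside the same transaction, which is where the extra processing overhead listed in the cons comes from.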

Log-based Change Data Capture

Transactional databases record all changes in a transaction log, which helps the database recover after a crash. Log-based CDC reads new transactions, including inserts, updates, and deletes, from the source database's transaction log. Changes are captured without making application-level changes or scanning operational tables, both of which would add workload and reduce source system performance.

- Pros

- It takes advantage of the fact that most transactional databases store all changes in a transaction (or database) log to read the changes from the log.

- The preferred and fastest CDC method

- Non-intrusive and least disruptive

- Requires no additional modifications to existing databases or applications

- Most databases already maintain a transaction log, so changes can be extracted from it directly

- Little to no overhead on database server performance

- Cons

- Some tools require an additional license

- Separate tools require additional operational effort and knowledge

- Primary or unique keys are needed for many log-based CDC tools

- If the target system is down, transaction logs must be kept until the target absorbs the changes
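Real transaction logs use database-specific formats, so the sketch below only simulates the idea with an in-memory list. It shows a reader that remembers the last position it consumed and returns only newer entries. `LogEntry`, `LogReader`, and the `lsn` (log sequence number) field are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEntry:
    lsn: int          # log sequence number, assumed strictly increasing
    operation: str    # "insert", "update", or "delete"
    key: int
    data: dict


class LogReader:
    """Returns entries past a saved position, like a log-based CDC consumer."""

    def __init__(self, log):
        self.log = log
        self.last_lsn = 0  # checkpoint: highest LSN already delivered

    def poll(self):
        new_entries = [entry for entry in self.log if entry.lsn > self.last_lsn]
        if new_entries:
            self.last_lsn = new_entries[-1].lsn
        return new_entries


# Simulated transaction log; a real one lives inside the database engine.
log = [
    LogEntry(1, "insert", 10, {"name": "Ada"}),
    LogEntry(2, "update", 10, {"name": "Ada L."}),
]
reader = LogReader(log)
first_batch = reader.poll()

log.append(LogEntry(3, "delete", 10, {}))
second_batch = reader.poll()
```

The checkpoint is also why logs must be retained while a target is down: entries newer than `last_lsn` have to stay available until the reader catches up.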

Storing Changes

Once captured, changes are stored in designated repositories, such as change tables or message queues. This ensures a centralized location for processing and application.

Applying Changes

The captured changes are then applied to target systems or processed by applications, enabling seamless data synchronization.
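Applying changes can be sketched as replaying a list of change events against a target. In this simplified example the target is a plain dictionary keyed by primary key, and the event fields (`op`, `key`, `data`) are assumptions rather than any particular tool's format.

```python
def apply_changes(target, changes):
    """Apply insert, update, and delete events to a dict keyed by primary key."""
    for change in changes:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            # Upsert: merging fields makes replaying the same event harmless.
            target[key] = {**target.get(key, {}), **change["data"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Hypothetical target state and change events.
target = {1: {"name": "Ada", "city": "London"}}
changes = [
    {"op": "update", "key": 1, "data": {"city": "Paris"}},
    {"op": "insert", "key": 2, "data": {"name": "Lin", "city": "Oslo"}},
    {"op": "delete", "key": 1},
]
apply_changes(target, changes)
print(target)
```

Treating inserts and updates as upserts and ignoring deletes for missing keys means a redelivered batch leaves the target unchanged, which simplifies recovery after a failure.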

Advanced CDC Techniques and Considerations

- Change Data Streaming: Change data streaming involves processing and analyzing real-time data changes as they occur. Technologies like Apache Kafka are commonly used for this purpose.

- Microbatching: Microbatching involves processing data in small, manageable batches to balance real-time processing with resource efficiency.

- Change Data Quality: Ensuring data quality in change data is essential for maintaining its accuracy and reliability.

- Change Data Transformation: Transforming change data involves preprocessing it before loading it into target systems.

Together, these techniques cover change data streaming, micro-batching, data quality assurance, continuous data replication, and data transformation. Each can be implemented in a general-purpose programming language such as Java.
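As one illustration, micro-batching can be sketched in a few lines. The example uses Python rather than Java purely for brevity, and the `micro_batches` helper and the event layout are hypothetical. It groups events by count only; real pipelines typically also flush a batch after a time limit.

```python
from itertools import islice


def micro_batches(events, batch_size):
    """Yield lists of at most batch_size events from any iterable."""
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Seven hypothetical change events, processed three at a time.
events = [{"key": i, "op": "update"} for i in range(7)]
batch_sizes = [len(batch) for batch in micro_batches(events, batch_size=3)]
print(batch_sizes)
```

Smaller batches lower latency, while larger ones reduce per-batch overhead, so the batch size is the knob that trades one for the other.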

Change Data Capture (CDC) Use Cases

CDC captures changes from the database transaction log and disseminates them to various destinations, encompassing cloud data lakes, data warehouses, or message hubs. Traditionally, CDC has been instrumental in database synchronization, while modern applications extend its utility to real-time streaming analytics and cloud data lake ingestion.

Traditional Database Synchronization/Replication

Historically, managing data changes involved batch-based replication methods. However, with the surge in demand for real-time streaming data analytics, continuous replication mechanisms facilitated by CDC have become necessary. By enabling continuous replication on smaller datasets and addressing only incremental changes, CDC obviates the need for offline database copying, ensuring uninterrupted synchronization between databases.

Modern Real-Time Streaming Analytics and Cloud Data Lake Ingestion

Keeping data current in cloud data lakes poses a significant challenge. Modern data architectures leverage CDC to ingest data into data lakes via automated data pipelines, avoiding redundant transfers by moving only the data that changed. Integration with Apache Spark enables real-time analytics, supporting advanced use cases such as artificial intelligence (AI) and machine learning (ML).

ETL for Data Warehousing

CDC optimizes Extract, Transform, and Load (ETL) processes by focusing solely on data changes, minimizing resource utilization, and reducing processing times. It streamlines ETL workflows by extracting, transforming, and loading data changes in small batches at a higher frequency, mitigating downtime and associated costs. Moreover, CDC reduces the risk of prolonged ETL job execution, helping preserve data integrity and operational efficiency.

Relational Transactional Databases

CDC facilitates real-time synchronization between source and target relational databases by capturing transactional data manipulation language (DML) and data definition language (DDL) instructions. By replicating these operations in real time, CDC ensures consistency between databases and enables seamless data propagation across systems.

You can also check out this case study to learn more.

How can CDC help my business?

CDC offers several benefits for your business:

- Real-Time Data: CDC monitors changes in the source database, ensuring all connected databases have the latest information in real time.

- Stay Compliant: Compliance is essential for any organization, and CDC helps by capturing changes as they occur.

- Save Time and Be More Efficient: CDC automates much of the data management work, freeing up your team to focus on growing your business.

- Make the Digital Transformation: CDC helps transition your business online by ensuring smooth data flow between platforms, helping you make better decisions and stay ahead of the competition.

- Make Better Decisions: With CDC, you can analyze your data to understand your customers and market trends better, leading to business growth and improvement over time.

Sync data from Dynamics 365 to Dynamics NAV

Sync data from Salesforce to AWS Redshift

Sync data from Salesforce to Jira

Major Roadblocks and Considerations in CDC

Implementing CDC raises challenges in three main areas: data consistency and integrity, performance, and security and compliance.

Data consistency and integrity issues

Ensuring real-time data accuracy: Real-time data accuracy is crucial in CDC to ensure that the most recent changes are captured and propagated immediately. This involves using techniques like low-latency data streaming and real-time synchronization to keep data current and reliable.

Managing data duplication errors: CDC systems need robust mechanisms to detect and eliminate duplicate records that can arise from multiple change captures or erroneous transactions. Effective deduplication techniques and reconciliation processes are essential to maintain a single source of truth.

Synchronizing data across diverse systems: CDC must handle the complexities of synchronizing data across heterogeneous environments, which may include different databases, applications, and platforms. This requires robust mapping, transformation, and integration strategies to ensure seamless data flow and consistency.
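Duplicate handling can be sketched as filtering out change events that were already seen. The example assumes each event carries a primary key and a log sequence number (`lsn`) that together identify it uniquely. Both field names and the `deduplicate` helper are hypothetical.

```python
def deduplicate(events):
    """Drop events whose (key, lsn) pair was already seen, preserving order."""
    seen = set()
    unique = []
    for event in events:
        identity = (event["key"], event["lsn"])
        if identity not in seen:
            seen.add(identity)
            unique.append(event)
    return unique


# Hypothetical stream in which one event was delivered twice.
events = [
    {"key": 1, "lsn": 100, "op": "insert"},
    {"key": 1, "lsn": 100, "op": "insert"},  # redelivered duplicate
    {"key": 1, "lsn": 101, "op": "update"},
]
unique_events = deduplicate(events)
print(len(unique_events))
```

The `seen` set grows with the stream, so a long-running pipeline would need to bound it, for example by discarding identities older than the last confirmed checkpoint.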

Performance considerations

Minimizing impact on source systems: To avoid disrupting the primary operations of source systems, CDC techniques should be designed to be non-intrusive. This can involve incremental data capture methods, change tracking mechanisms, and offloading processing tasks to separate systems.

Optimizing data processing speeds: CDC systems need to process captured changes quickly to keep up with high-velocity data environments. Techniques such as parallel processing, in-memory computing, and efficient data pipeline designs are critical for optimizing data processing speeds.

Balancing load to ensure system responsiveness: Load balancing is essential in CDC to distribute workloads evenly across systems, prevent bottlenecks, and ensure that both the source system and target systems remain responsive. This involves dynamic resource allocation and monitoring to manage varying data loads effectively.

Security and compliance considerations

Protecting data during transfer: Ensuring the security of data during transfer is critical in CDC. This includes using encryption methods, secure communication channels, and integrity checks to prevent data breaches and unauthorized access during data movement.

Adhering to data privacy regulations: CDC systems must comply with privacy laws and regulations such as GDPR, HIPAA, and CCPA. This involves ensuring data capture, storage, and transfer practices protect user privacy and meet legal requirements.

Implementing robust access controls: CDC systems should implement robust access control mechanisms to prevent unauthorized access and ensure data security. These include role-based access controls, authentication protocols, and audit trails to monitor and manage access to data.

Conclusion:

CDC is a helpful tool for businesses that want to stay competitive. By understanding the basics of CDC, you can make the most of your data and make better decisions faster. Try our SQL-Server Integration Platform with a free 14-day trial.

Look for more posts where we’ll dive deeper into CDC techniques and best practices.

FAQ

What is the difference between SCD and CDC?

SCD (slowly changing dimensions) is primarily concerned with tracking and managing changes in dimension data within a data warehouse over time, while CDC is focused on real-time detection and propagation of changes in source data to ensure timely updates across multiple data systems.

What are the different types of CDC?

Log-Based CDC: Monitors database logs (e.g., transaction logs) to detect changes.

Trigger-Based CDC: Uses database triggers to capture changes as they occur.

Timestamp-Based CDC: Compares timestamps to identify changes.

What is the difference between ETL and CDC?

ETL and CDC are complementary techniques used in data integration. ETL is ideal for batch processing and complex transformations, while CDC is essential for real-time data synchronization and ensuring that target systems are up-to-date with the latest changes from source systems.
