Automate, integrate, and accelerate your document workflows with Intelligent Document Processing (IDP)

If you’ve ever stared at a pile of invoices, purchase orders, or contracts in different formats and thought, “How am I going to get all this information into Salesforce, Dynamics 365, NetSuite, or my ERP without going crazy?”, you’re not alone.

We understand. Most businesses still rely on manual data entry to process documents arriving from emails, vendor portals, scanned copies, and other sources. It’s tedious, error-prone, and, frankly, a huge waste of your team’s time and energy.

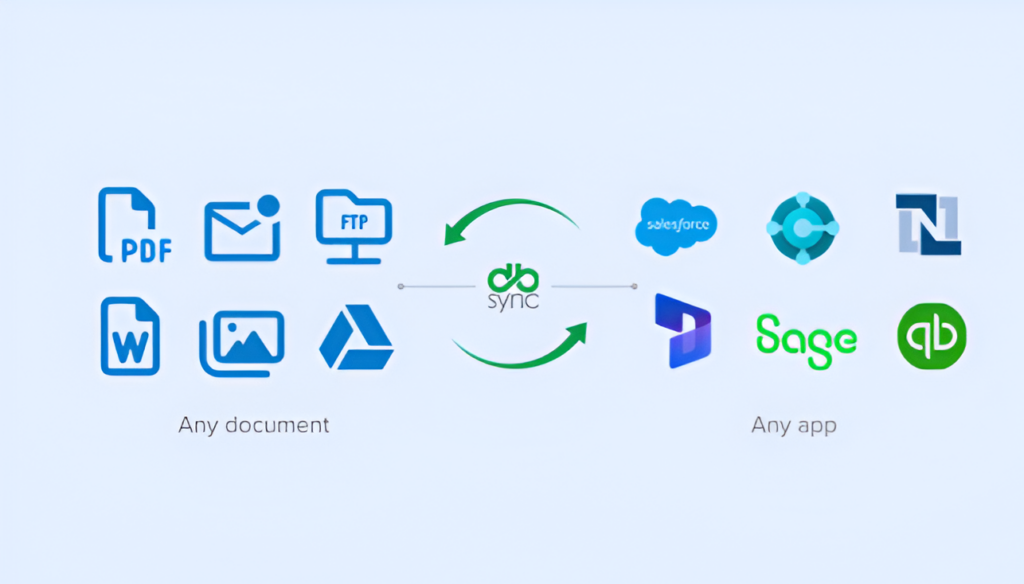

That’s why we built DBSync Intelligent Document Processing (IDP). It automatically extracts the information you need from documents in virtually any format and sends it to the systems you use every day. No more copying and pasting, and no more hunting for missing information: just reliable, easy-to-use automation that lets your team focus on what matters most.

Why the way we handle documents needs to change

Let’s be honest: manual data entry is slowing businesses down. Teams spend a significant amount of time keying data from scanned images and PDFs into business systems. This not only hurts productivity but also increases the risk of errors and compliance problems.

As businesses grow, so does the number of document types they have to handle.

Businesses today need a way to:

- Handle a wide variety of documents and sources

- Extract information quickly and accurately

- Integrate with the business apps they already use

- Scale as their needs grow

That’s exactly what DBSync’s AI-powered document processing is designed to do.

What does “IDP” mean?

Intelligent Document Processing, or IDP, is a way to extract data from virtually any type of document and feed it directly into your business systems. It combines AI, optical character recognition (OCR), and automation to quickly convert unstructured content, such as PDFs, Word documents, scanned images, or handwritten notes, into usable, structured information.

Key features

- Multi-Format Support: Handles PDFs, images, and more.

- AI-Powered Extraction: Advanced OCR and machine learning deliver highly accurate results.

- Seamless Integration: Works with the apps you already use, such as Salesforce, Dynamics 365, Business Central, and others.

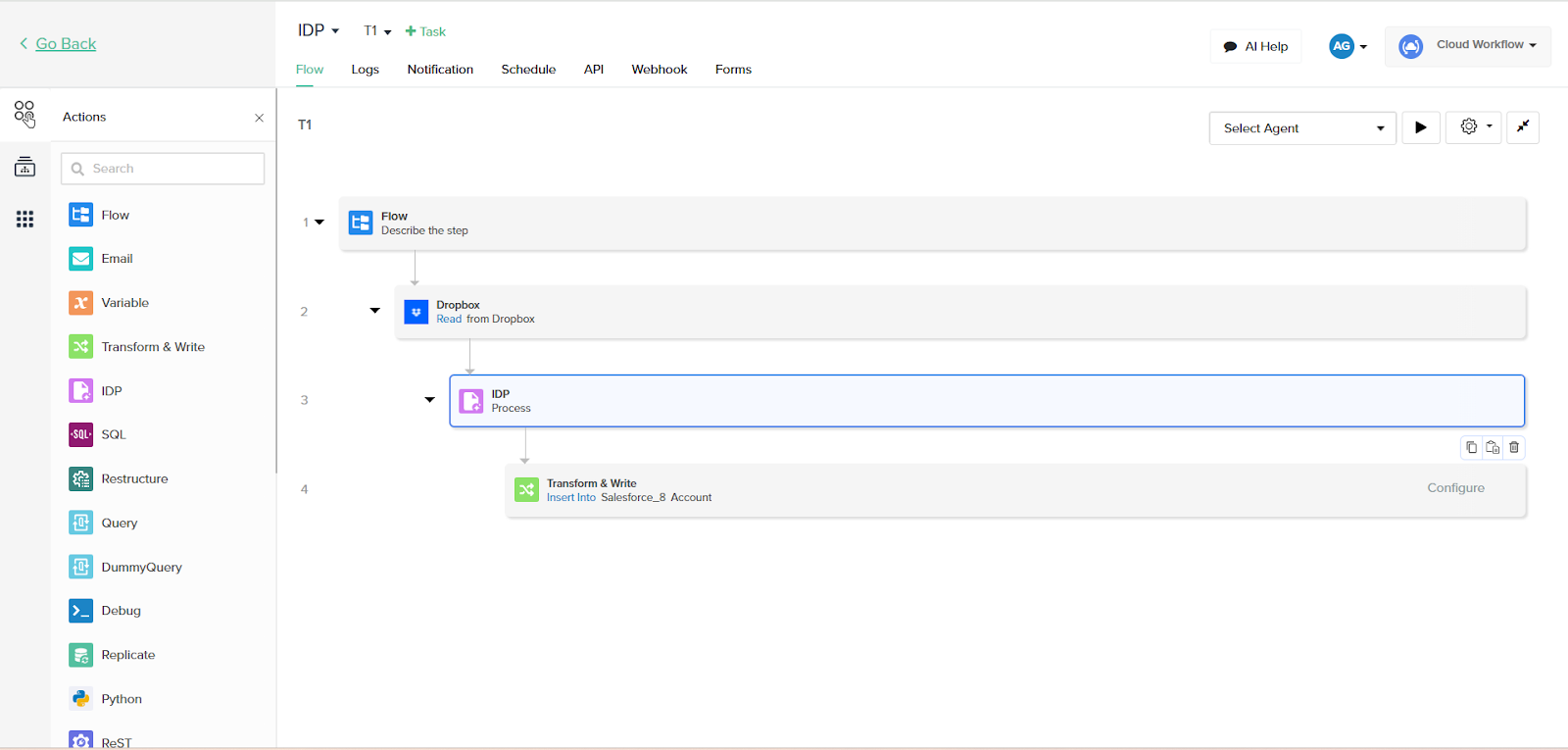

- Visual Configuration: Visual mapping and drag-and-drop setup make it easy to modify workflow operations.

- Enterprise-Grade Security: Data encryption, access controls, and compliance support.

- Scalable Automation: Processes hundreds of documents with little or no manual intervention.

How does DBSync IDP work?

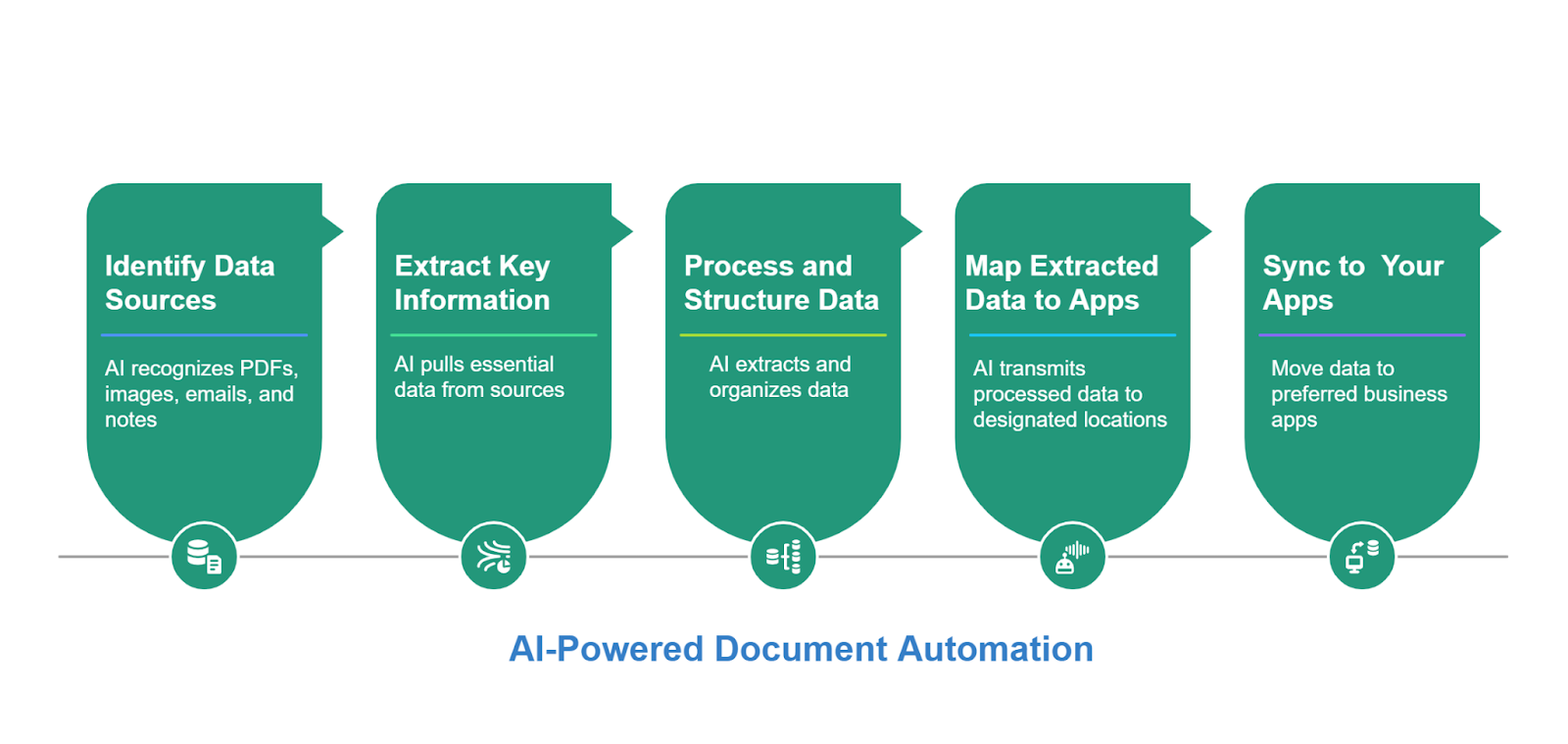

DBSync IDP streamlines the entire document workflow:

- Document Ingestion: Upload documents manually or have them imported automatically from email, cloud storage, or other sources.

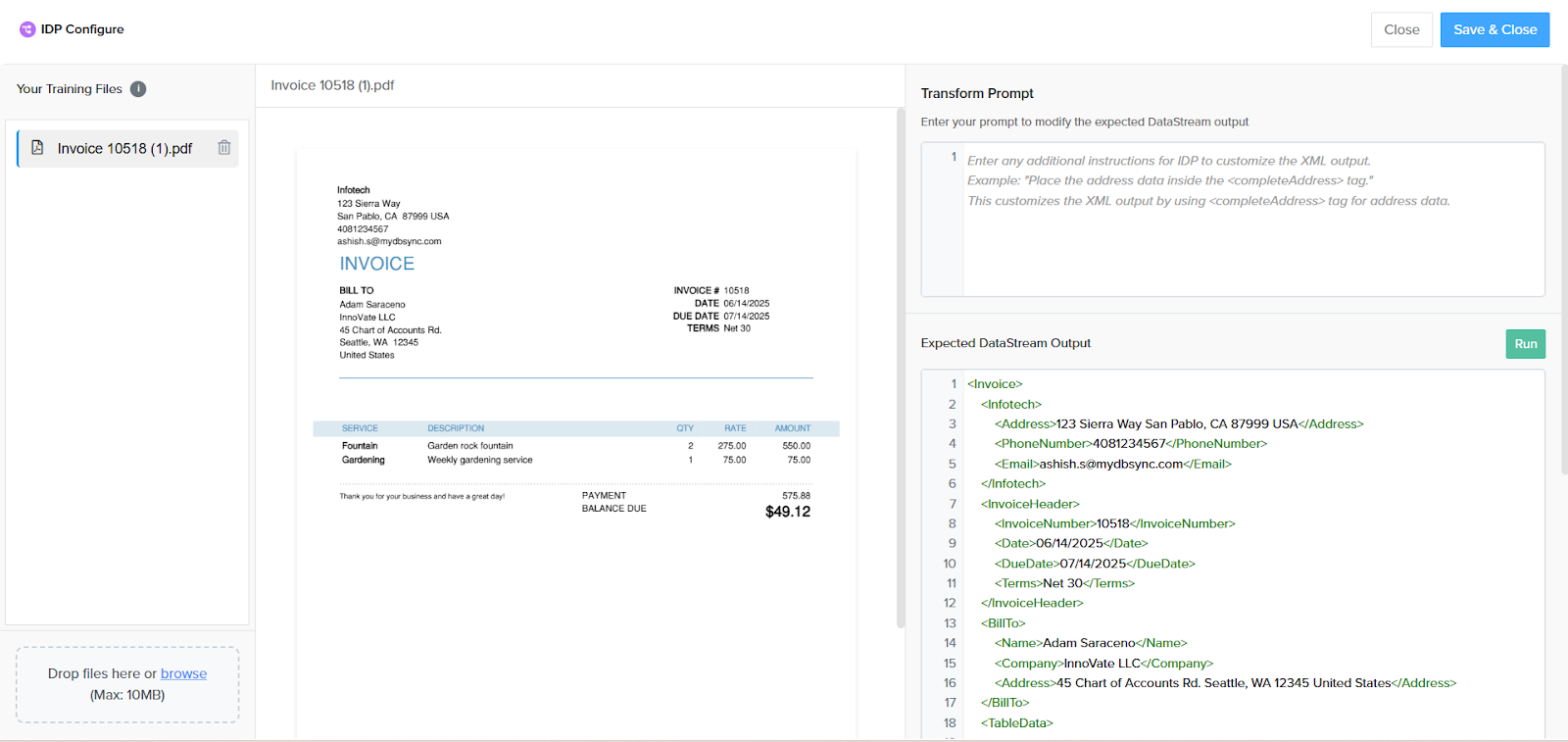

- Data Extraction: AI and OCR scan the document, identify important fields, and extract the necessary data.

- Data Structuring: Information is organized into structured formats, such as XML.

- Integration: Send the structured data to the systems you choose, such as Salesforce, NetSuite, Dynamics 365, Business Central, and more.

- Automation and Alerts: Trigger workflows, send notifications, or request approvals as needed.

This end-to-end process reduces errors, eliminates manual data entry, and keeps your business running smoothly.
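As a rough illustration of the Data Structuring step, the sketch below turns a dictionary of fields, like one an extraction step might return, into XML using Python’s standard `xml.etree.ElementTree`. The element names (`Invoice`, `VendorName`, `PONumber`, and so on) and the sample values are hypothetical; DBSync’s actual XML schema is not described here.

```python
import xml.etree.ElementTree as ET

def fields_to_xml(doc_type: str, fields: dict[str, str]) -> str:
    """Serialize extracted key/value fields into a simple XML string.

    Hypothetical schema: a root element named after the document type,
    with one child element per extracted field. Not DBSync's format.
    """
    root = ET.Element(doc_type)
    for name, value in fields.items():
        child = ET.SubElement(root, name)
        child.text = value  # special characters such as & are escaped on output
    return ET.tostring(root, encoding="unicode")

# Hypothetical output of an OCR/AI extraction step for one invoice
extracted = {
    "VendorName": "Acme Supplies",
    "PONumber": "PO-1042",
    "Amount": "1250.00",
    "DueDate": "2024-07-31",
}

print(fields_to_xml("Invoice", extracted))
```

Running it prints a single `<Invoice>` element containing one child per field, which downstream integration steps can parse and map.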

Change the way your document operations work today

Imagine a fast-growing manufacturing business that receives hundreds of purchase orders and invoices every week, all in different formats: PDFs sent by email, or images scanned on mobile devices and uploaded to a vendor portal. Previously, its finance team spent hours manually pulling out key details such as payment terms, PO numbers, and vendor names, then re-entering them into both the CRM and the ERP. The manual process was slow, error-prone, and led to missed opportunities.

DBSync’s Intelligent Document Processing reads every incoming document, regardless of format or channel, extracts and validates the relevant data, and sends it directly to platforms such as Salesforce, Dynamics 365, or NetSuite. The result? Faster processing, fewer errors, and more time for the finance team to focus on strategy instead of repetitive data entry.

The technology that makes IDP work

DBSync IDP builds on recent advances in AI and machine learning:

- Document Capture & Digitization: Converts paper and digital files into machine-readable formats.

- Image Enhancement and Pre-processing: Improves document clarity so that information can be extracted accurately.

- OCR and Text Recognition: Reads both printed and handwritten text and converts it into searchable, editable text.

- Machine Learning-Based Extraction: Learns to identify and extract relevant information, even from documents that are hard to read or poorly structured.

- Natural Language Understanding: Interprets the meaning of a document from its context, relationships, and purpose.

- Validation and Error Correction: Checks the extracted data for errors and verifies its accuracy before it enters your systems.

- Integration with Existing Systems: Connects the extracted data to the business systems and processes you already use.

Together, these capabilities enable DBSync IDP to handle even the most complex documents quickly, accurately, and at scale.
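To make the Validation and Error Correction step more concrete, here is a minimal sketch of rule-based checks applied to extracted invoice fields before they are synced. The required fields and the rules (positive amount, ISO 8601 due date) are assumptions chosen for illustration, not DBSync’s actual validation logic.

```python
from datetime import date
from decimal import Decimal, InvalidOperation

# Hypothetical set of fields an invoice must contain before syncing
REQUIRED_FIELDS = ("VendorName", "Amount", "DueDate")

def validate_invoice(fields: dict[str, str]) -> list[str]:
    """Return a list of human-readable problems; an empty list means the record passed."""
    errors = []
    for name in REQUIRED_FIELDS:
        if not fields.get(name, "").strip():
            errors.append(f"missing {name}")

    amount = fields.get("Amount", "").strip()
    if amount:
        try:
            # Remove a common thousands separator before parsing as a decimal
            if Decimal(amount.replace(",", "")) <= 0:
                errors.append("Amount must be positive")
        except InvalidOperation:
            errors.append(f"Amount is not a number: {amount!r}")

    due = fields.get("DueDate", "").strip()
    if due:
        try:
            date.fromisoformat(due)  # expects an ISO 8601 date such as 2024-07-31
        except ValueError:
            errors.append(f"DueDate is not an ISO date: {due!r}")
    return errors

print(validate_invoice({"VendorName": "Acme Supplies", "Amount": "1,250.00", "DueDate": "2024-07-31"}))
print(validate_invoice({"VendorName": "", "Amount": "abc", "DueDate": "31/07/2024"}))
```

Returning a list of problems rather than stopping at the first one lets a workflow route the document to a person for review with every issue flagged at once.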

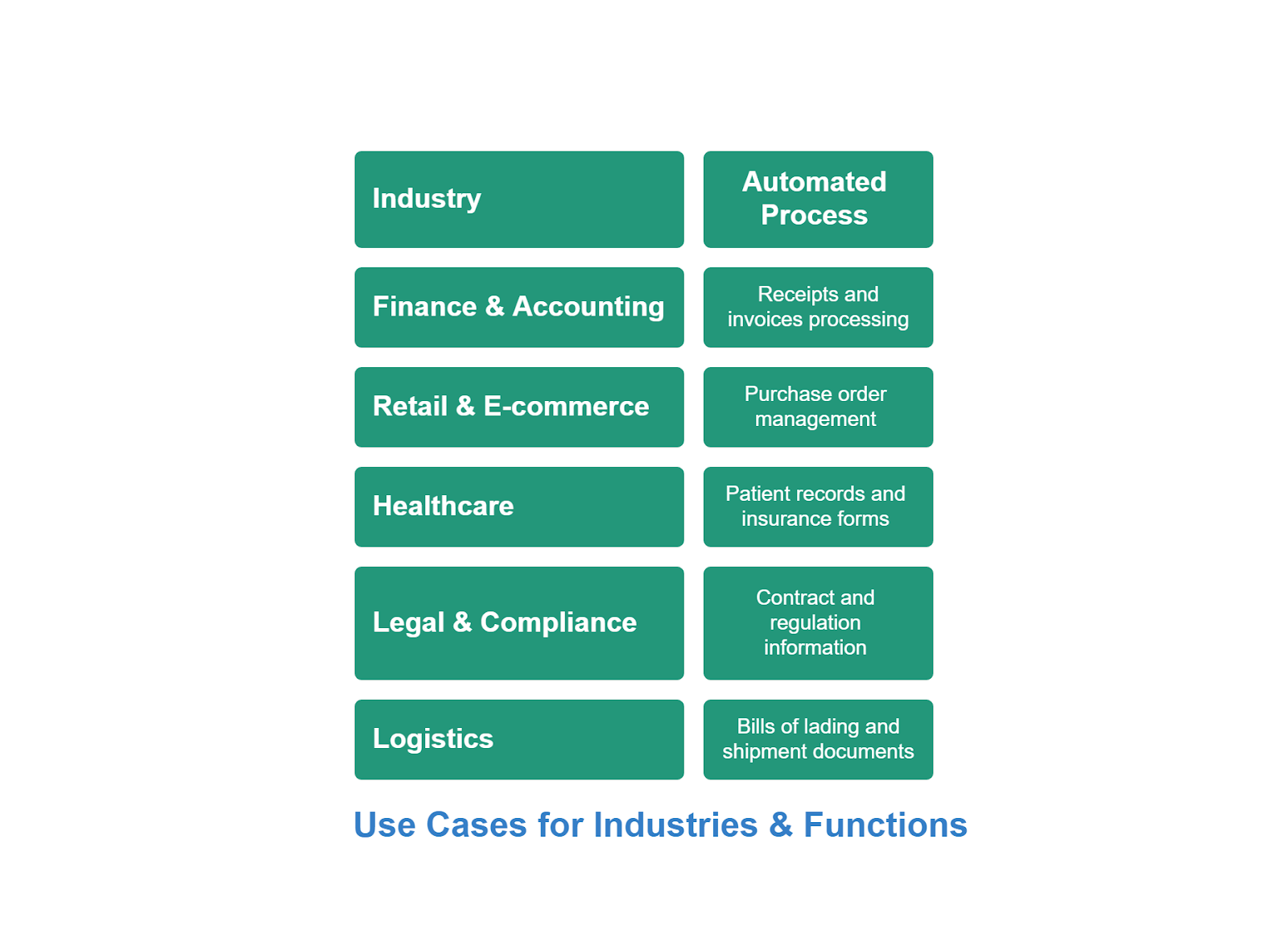

Use Cases

- Finance and Accounting: Automatically process invoices and receipts and sync them with NetSuite and Dynamics 365.

- Retail and E-commerce: Streamline order management by adding purchase orders directly to Salesforce.

- Healthcare: Digitize patient records and insurance forms while keeping them HIPAA-compliant.

- Legal and Compliance: Extract information from contracts and regulatory documents and store it securely.

- Logistics: Automate the processing of bills of lading and shipping documents.

For example, a finance team might receive hundreds of invoices every month in different formats. With DBSync’s IDP Action, users can upload their invoices, extract key information such as the vendor, amount, and due date, and automatically send it to their accounting software. This saves time, reduces errors, and speeds up the payment process.

Frequently Asked Questions (FAQ)

Q: What kinds of documents can I use the IDP Action on?

A: You can upload and work with a wide range of file types, including PDFs, JPEGs, PNGs, scanned forms, receipts, and more.

Q: What business systems can I link to?

A: DBSync already integrates with Salesforce, Microsoft Dynamics 365 CRM, Business Central, NetSuite, and more than 50 other business apps.

Q: Is DBSync IDP secure?

A: Yes. DBSync provides business-grade access controls, audit trails, and encryption.

Q: Can I customize how the extraction works?

A: Yes. You can choose which data to extract and how it is mapped.

Q: How does IDP scale with our business needs?

A: DBSync IDP can process hundreds of documents today, depending on your business needs, and we are actively expanding its capabilities as part of the product roadmap.

Q: What can I do with the data extracted from my documents?

A: You can map the structured XML output to any app or database in your integration workflow, which makes downstream automation easy.
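The mapping described in this answer can be pictured as a field-to-field translation. The sketch below parses an XML output and renames its elements according to a mapping table, producing a flat record that an integration step could send to a target system. The XML element names and the target field names (`supplier`, `total`, `payment_due`) are hypothetical placeholders, not actual DBSync, Salesforce, or NetSuite field names.

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping: IDP XML element name -> target system field name
FIELD_MAP = {
    "VendorName": "supplier",
    "Amount": "total",
    "DueDate": "payment_due",
}

def map_xml_to_record(xml_text: str, field_map: dict[str, str]) -> dict[str, str]:
    """Build a flat record for a target app from structured XML output.

    Only elements listed in field_map are copied; unmapped elements are ignored,
    and mapped elements missing from the XML are left out of the record.
    """
    root = ET.fromstring(xml_text)
    record = {}
    for source, target in field_map.items():
        element = root.find(source)
        if element is not None and element.text is not None:
            record[target] = element.text.strip()
    return record

xml_output = (
    "<Invoice><VendorName>Acme Supplies</VendorName>"
    "<PONumber>PO-1042</PONumber><Amount>1250.00</Amount>"
    "<DueDate>2024-07-31</DueDate></Invoice>"
)
print(map_xml_to_record(xml_output, FIELD_MAP))
```

Keeping the mapping in a plain table, separate from the parsing code, mirrors the idea that users configure which fields go where without changing the underlying workflow.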

Q: Can I process more than one document at a time?

A: We recommend processing one document at a time and configuring a template specific to the type of invoices you process.

Ready to transform your document workflows?

Don’t let manual document processing slow down your business. No matter where your data comes from or where it needs to go, DBSync Intelligent Document Processing can help you automate, connect, and speed up your most important workflows.

To learn more about DBSync’s Intelligent Document Processing solution, visit our website or contact our sales team to see how we can help transform your document workflows.